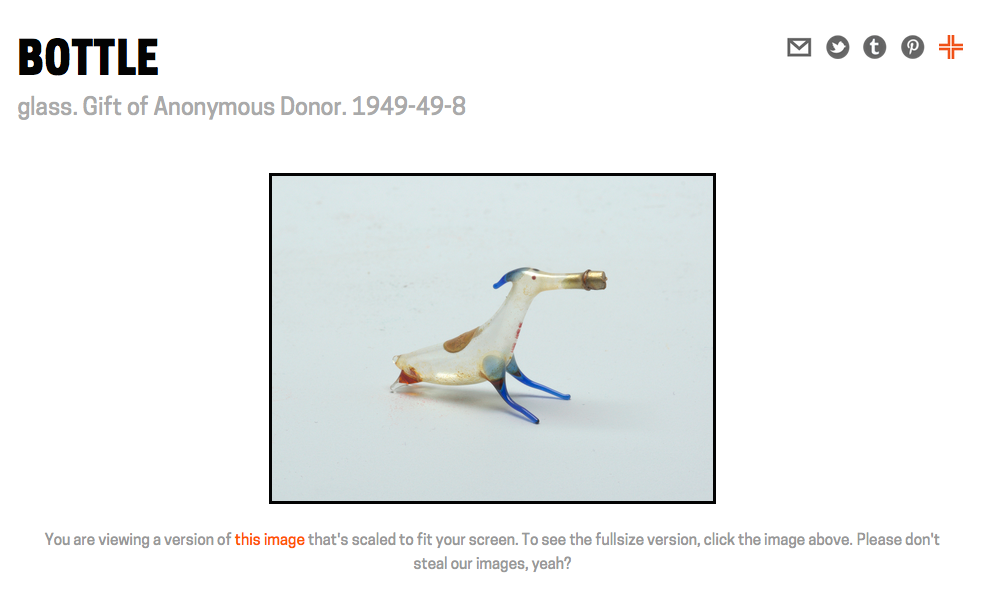

You can now collect any object in the collection, or on display, from the collections website itself. Just like in the galleries, there is a small “collect” icon on the top right-hand side of every object page on the collections website. And it’s not just individual object pages: the icon appears on all the object list pages, too. So many “collect” icons!

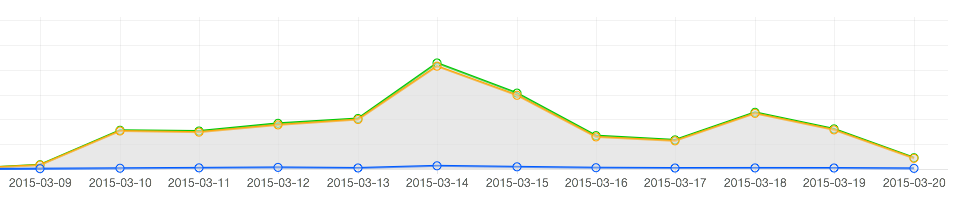

Objects that haven’t been collected yet have a grey icon.

Objects that have been collected in the galleries, as part of a visit to the museum, have a pink icon.

Objects that have been collected on the collections website have an orange icon.

Simply click the grey icon to collect an object or click one of the orange or pink icons to remove or un-collect that object.
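As a rough illustration of the three icon colours and the click behaviour described above, here is a minimal sketch. The names `CollectState` and `click` are made up for this example and are not taken from the collections website's code; it also assumes that collecting from the website always produces the orange "collected on the website" state.

```python
from enum import Enum


class CollectState(Enum):
    """Hypothetical model of the collect icon; values are the icon colours."""
    NOT_COLLECTED = "grey"
    COLLECTED_IN_GALLERY = "pink"
    COLLECTED_ON_WEBSITE = "orange"


def click(state: CollectState) -> CollectState:
    """Return the state an object would be in after clicking its icon.

    Assumption: clicking grey collects the object on the website (orange);
    clicking pink or orange un-collects it (back to grey).
    """
    if state is CollectState.NOT_COLLECTED:
        return CollectState.COLLECTED_ON_WEBSITE
    return CollectState.NOT_COLLECTED
```

For example, `click(CollectState.NOT_COLLECTED)` gives the orange state, and clicking either a pink or an orange icon gives the grey state again.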

That’s it!

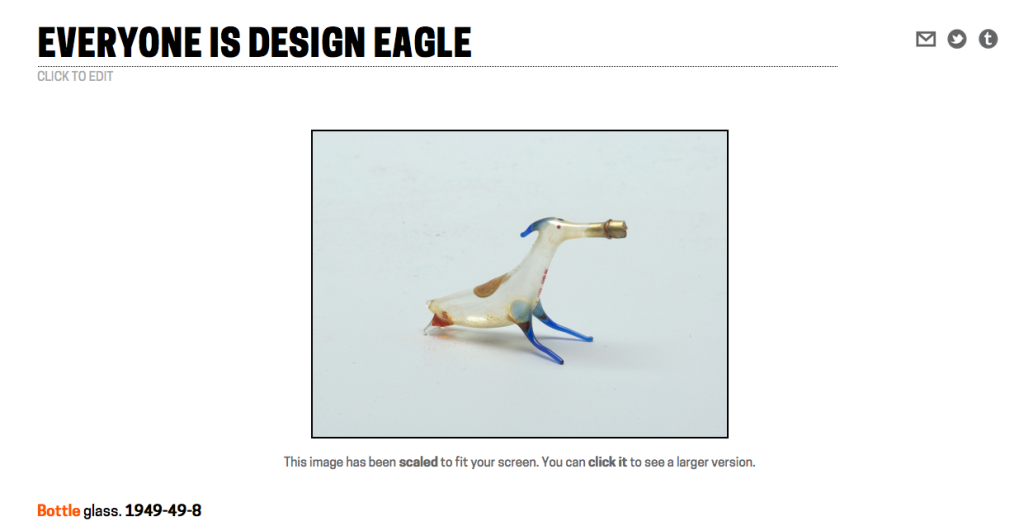

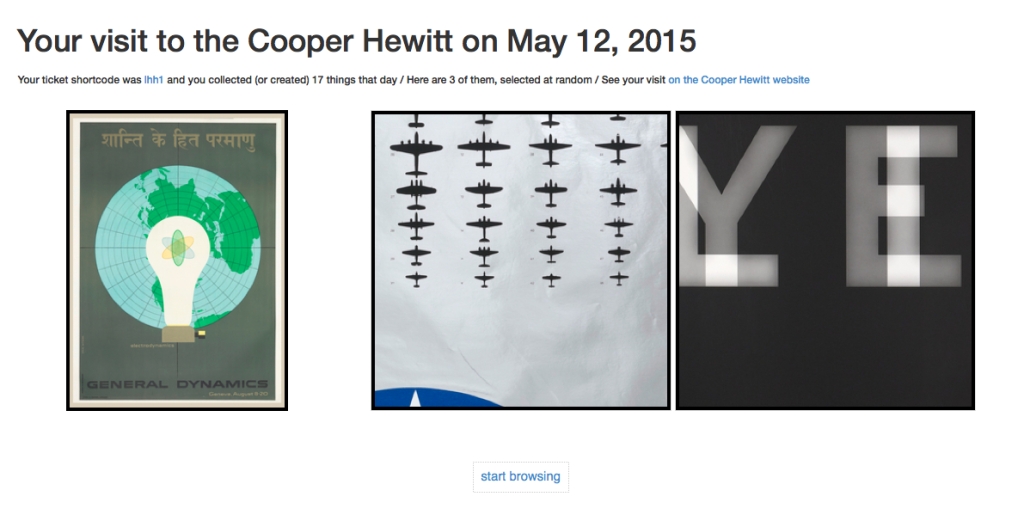

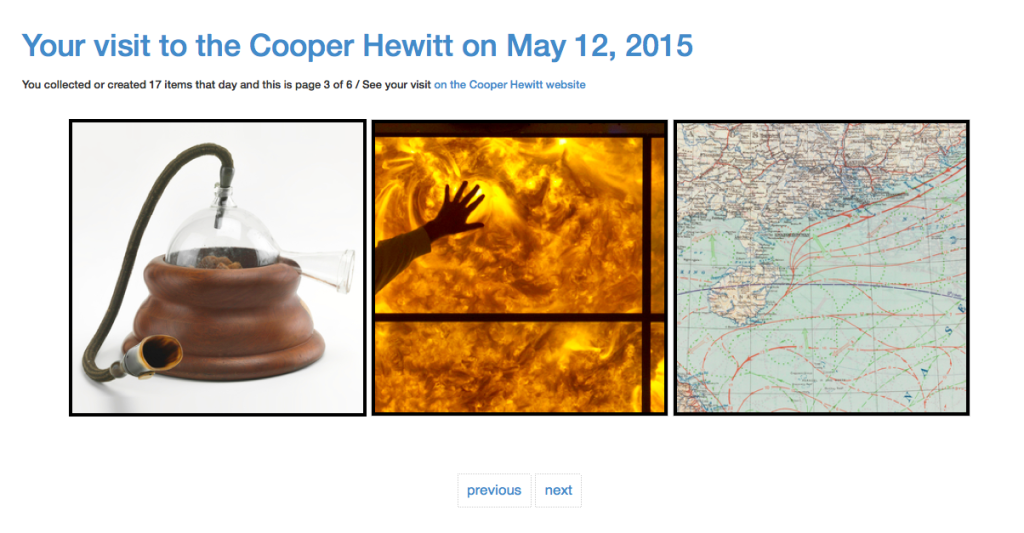

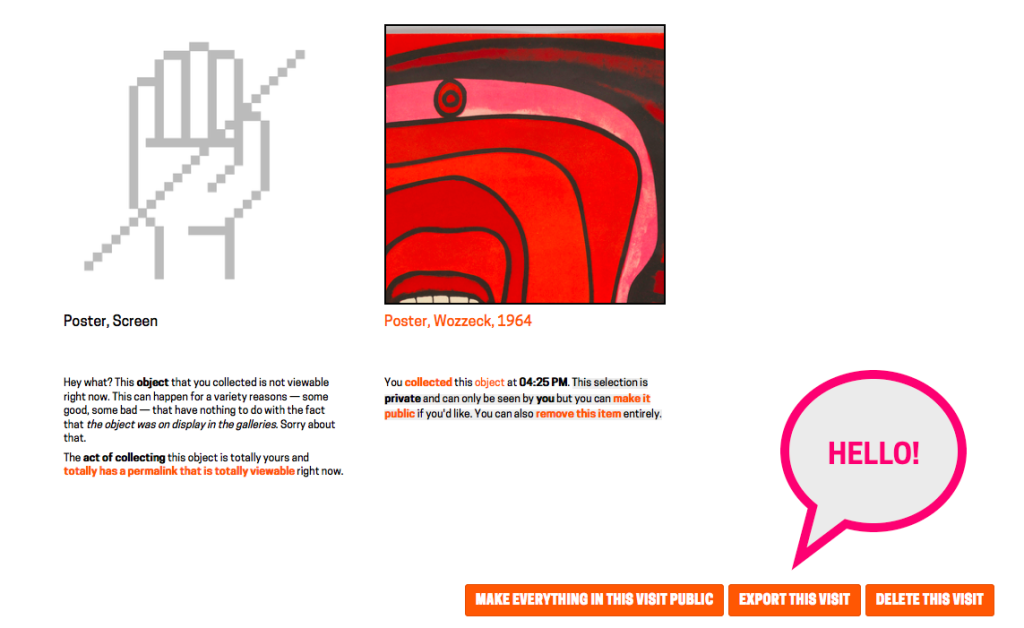

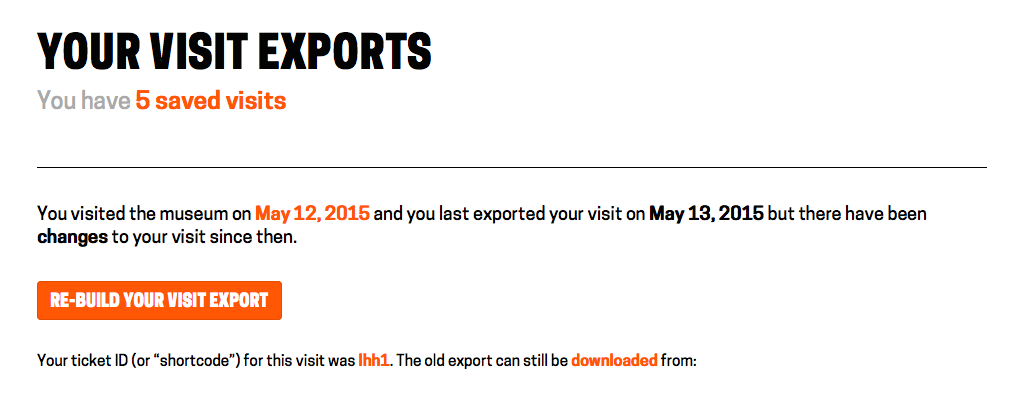

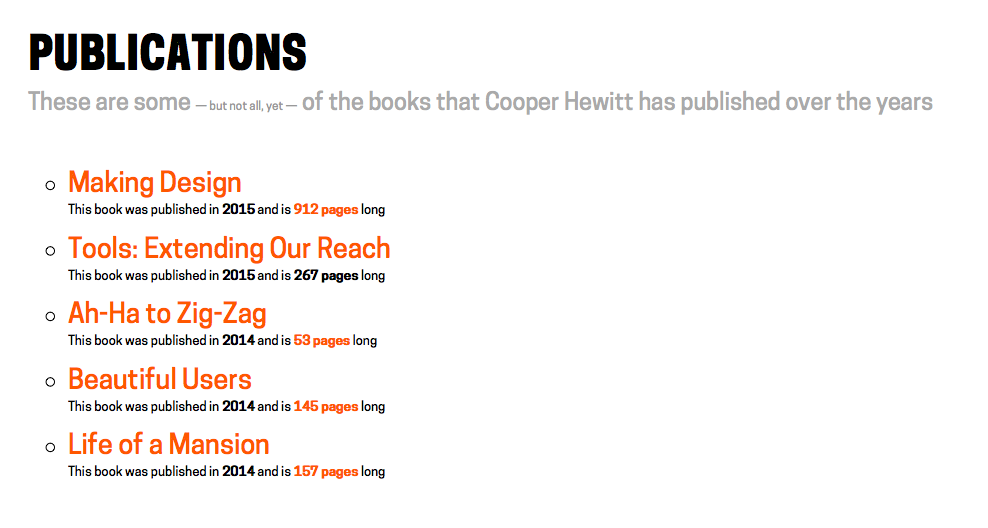

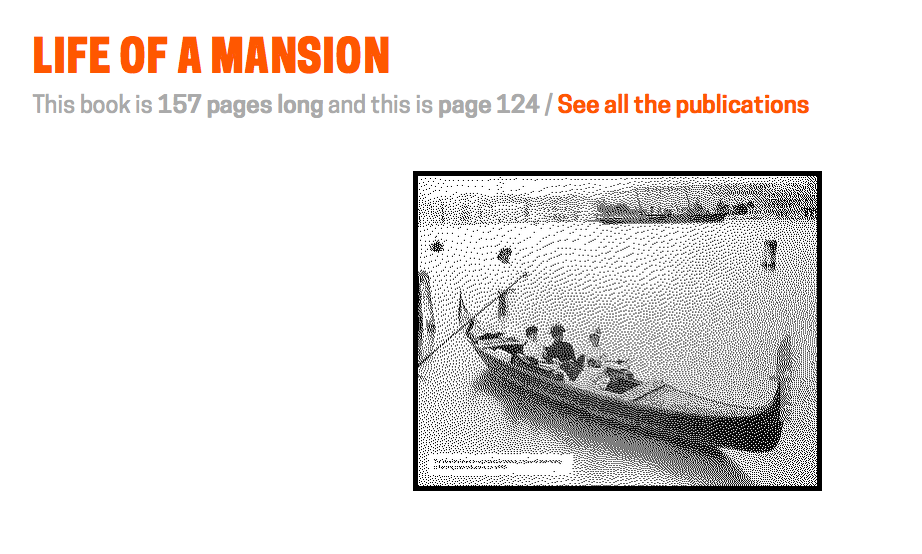

Just like visit items, things you collect on the website have a permanent URL that can be made public to share with other people and can be given a bespoke title or description. Objects that you collect on the collections website live in something we’re calling the “shoebox”.

You can get to your shoebox by visiting https://collection.cooperhewitt.org/users/YOUR-USERNAME/shoebox or, if you’re already logged in to your Cooper Hewitt account, by visiting https://collection.cooperhewitt.org/you/shoebox/.
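If you want to build those two links in a script, something like the following sketch would do it. The function name `shoebox_url` is invented for this example; only the two URL patterns come from the paragraph above, and percent-encoding the username is a precaution we are assuming is harmless rather than something the website documents.

```python
from typing import Optional
from urllib.parse import quote

BASE_URL = "https://collection.cooperhewitt.org"


def shoebox_url(username: Optional[str] = None) -> str:
    """Build a shoebox URL (hypothetical helper, not part of any official API).

    With no username, return the shortcut for the logged-in user;
    otherwise return the per-user shoebox URL.
    """
    if username is None:
        return f"{BASE_URL}/you/shoebox/"
    # Percent-encode the username so unusual characters stay inside one path segment.
    return f"{BASE_URL}/users/{quote(username, safe='')}/shoebox"
```

Calling `shoebox_url()` returns the logged-in shortcut, while `shoebox_url("YOUR-USERNAME")` returns the longer per-user form.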

There is also a handy link in the Your stuff menu, located at the top-left of every page on the collections website.

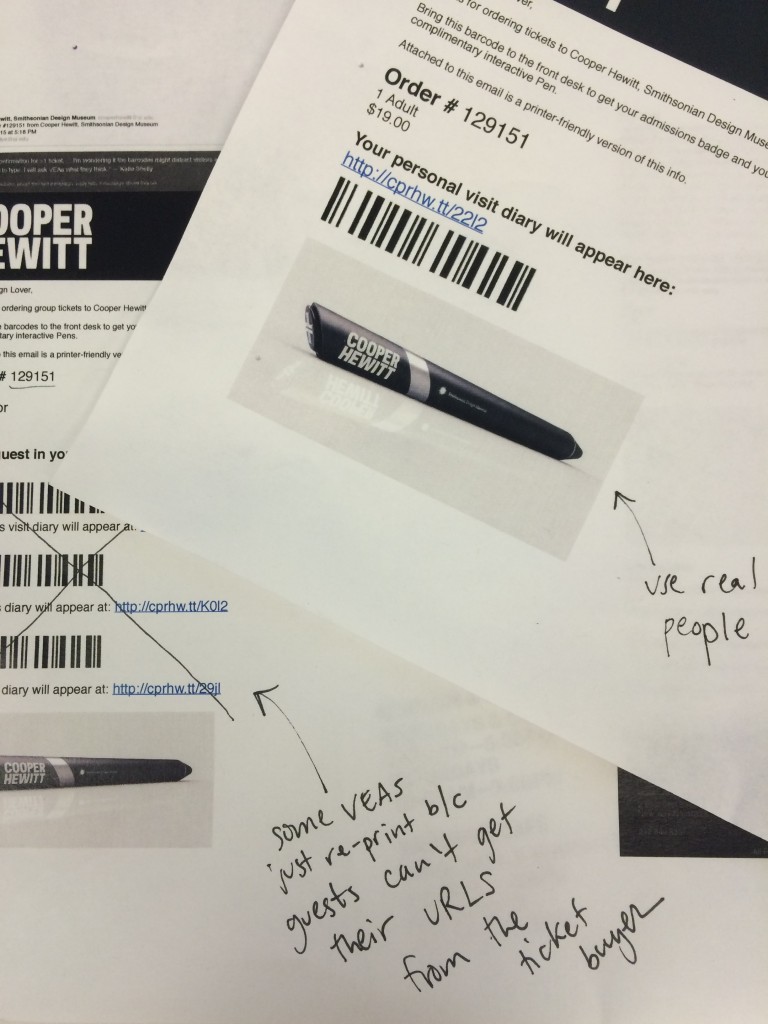

The shoebox is the set of all the objects you’ve collected (or created) on the website or during your visits to the museum. Although visits and visit items overlap with things in your shoebox, we still treat them differently: you need to be logged in to your Cooper Hewitt account to add things to your shoebox, whereas a visit to the museum can be entirely anonymous if a visitor so chooses.

The default view for the shoebox is to display everything together in reverse-chronological order but you can filter the view to show only things collected online or things collected during a visit. You can also see the set of all the objects you’ve made public or private.
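To make the default ordering and the filters concrete, here is a small sketch of how such a view could be computed. Everything in it is an assumption made for illustration: the `ShoeboxItem` fields, the `"online"` and `"visit"` labels and the `shoebox_view` function are not the website's actual data model.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class ShoeboxItem:
    """Hypothetical record for one collected thing."""
    title: str
    collected_at: datetime
    source: str       # assumed labels: "online" or "visit"
    is_public: bool


def shoebox_view(
    items: List[ShoeboxItem],
    source: Optional[str] = None,
    is_public: Optional[bool] = None,
) -> List[ShoeboxItem]:
    """Return items newest-first, optionally filtered by source and visibility.

    Passing None for a filter means "don't filter on this", which
    corresponds to the default everything-together view.
    """
    selected = [
        item for item in items
        if (source is None or item.source == source)
        and (is_public is None or item.is_public == is_public)
    ]
    # Reverse-chronological: most recently collected first.
    return sorted(selected, key=lambda item: item.collected_at, reverse=True)
```

With no arguments beyond the items you get the default view; `shoebox_view(items, source="visit")` would show only things collected during a visit, and `shoebox_view(items, is_public=True)` only the things you’ve made public.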

logged out view (large version)

logged in view (large version)

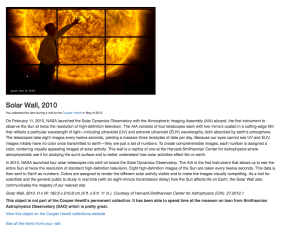

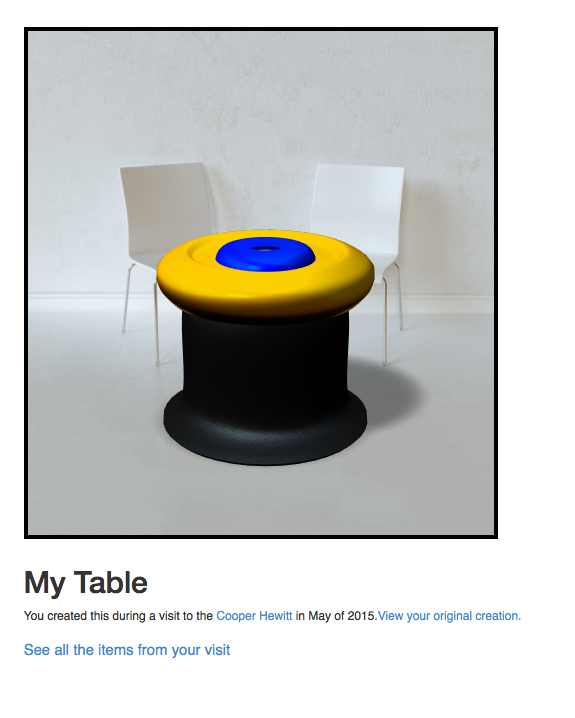

But it’s not just objects, either. You can already collect videos during your museum visit, so those are included too. Ultimately the only limit to what you might collect with the Pen is time-and-typing. Things we’re thinking about making collect-able include entire exhibitions, the introductory texts on the wall for an exhibition, people, or individual rooms in the Mansion.

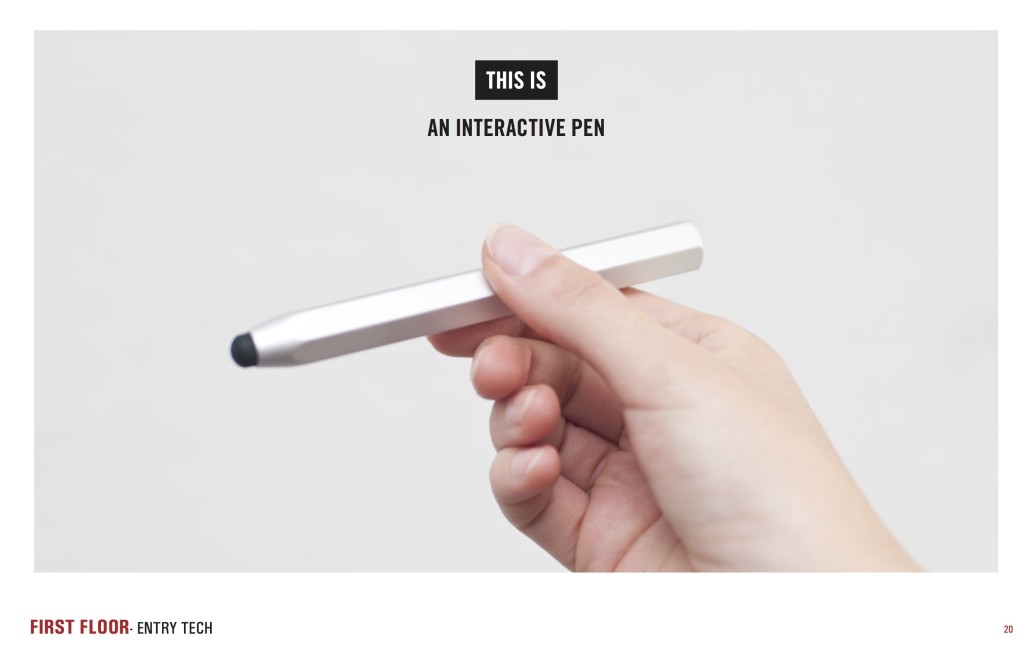

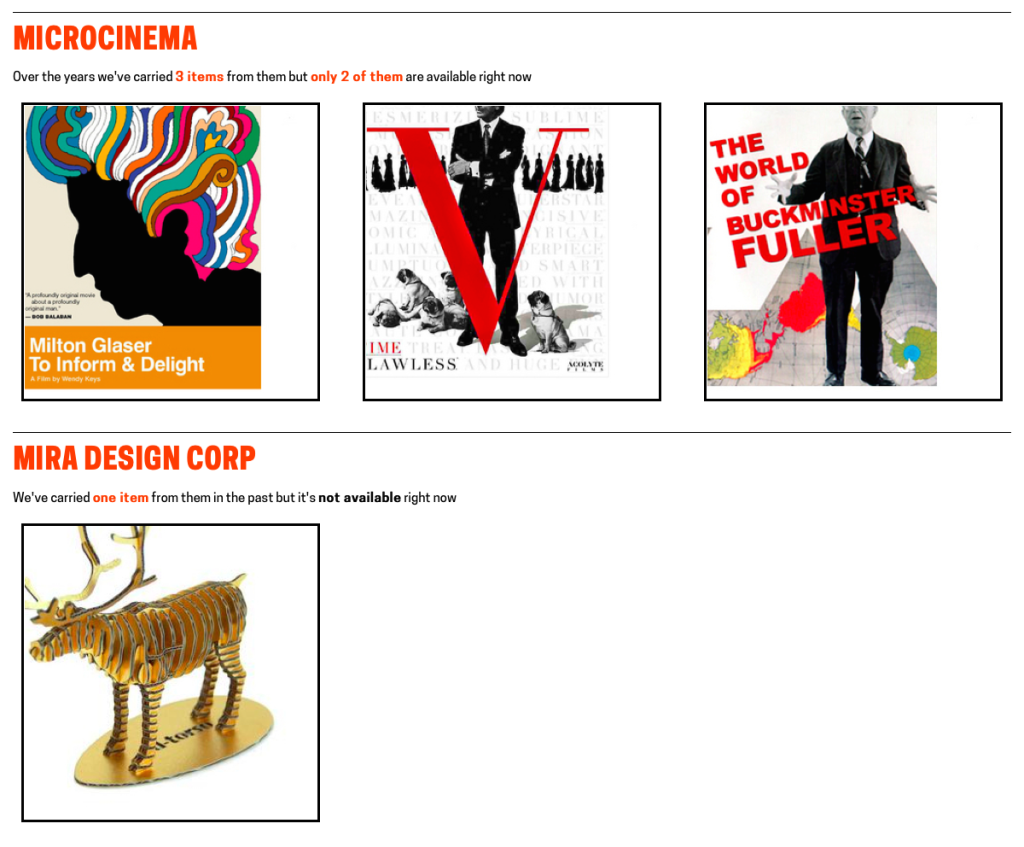

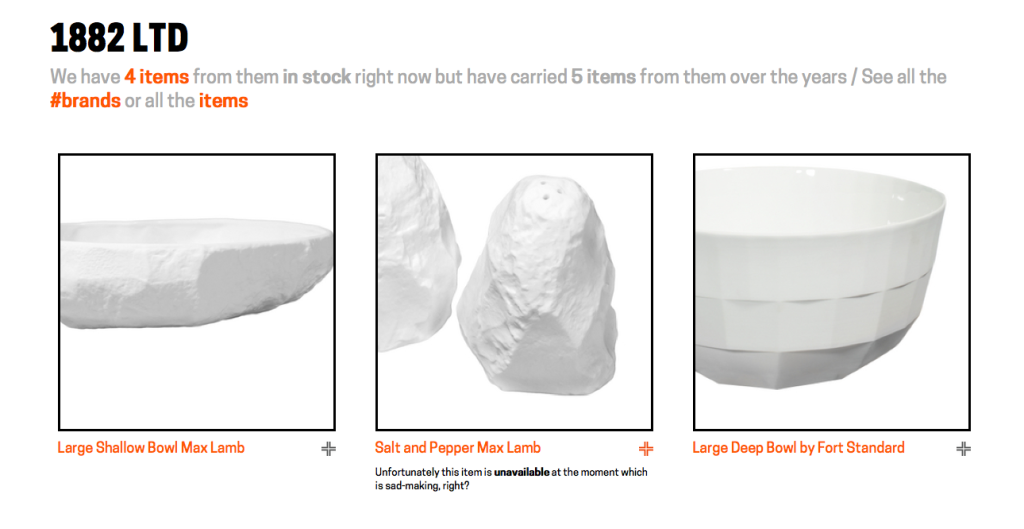

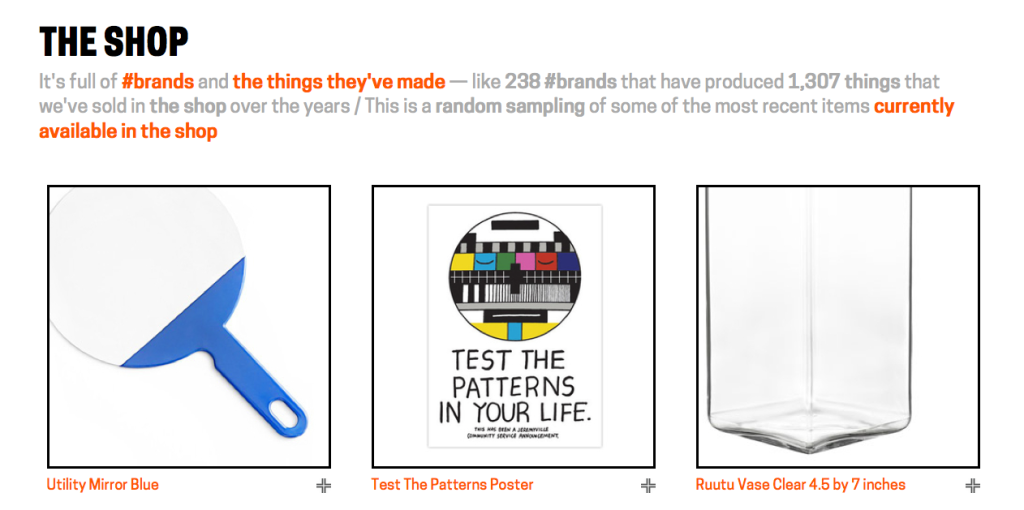

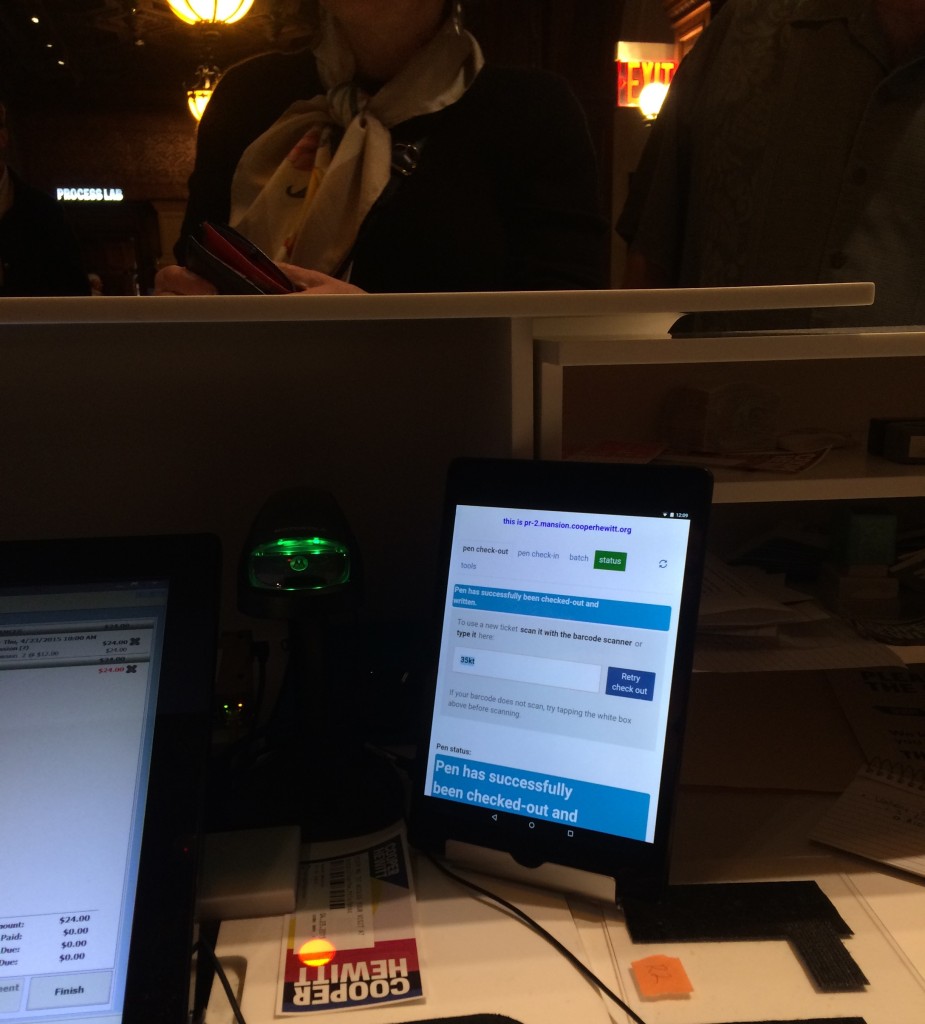

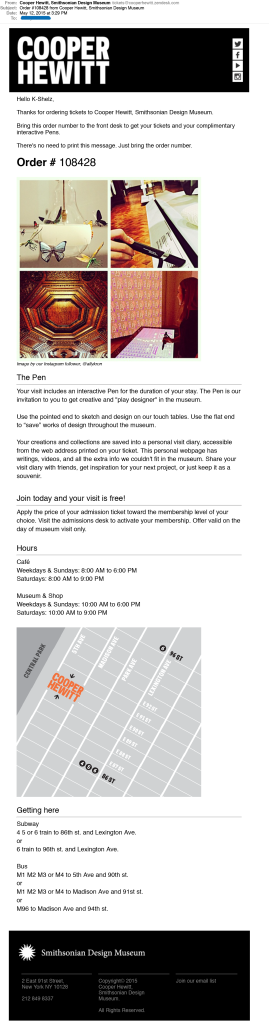

Museum retail

We’ve started this process by allowing you to collect things in the museum Shop.

By “things in the Shop” we mean all the things that have ever been sold in the Shop over the years. And by “all the things” we mean almost all the things. There is some technical hoop-jumping related to inventory management systems and that is why we don’t have everything yet but we’ll get there in time.

We are a capital-D design museum with a capital-D design shop, and many of the things that have been available in the Shop have gone on to become part of our permanent collection, so it only makes sense to give them a home on the collections website. In fact MoMA already does something similar with their “find related products in the MoMA Store” feature, though ours is a bit different.

You can see for yourself at https://collection.cooperhewitt.org/shop

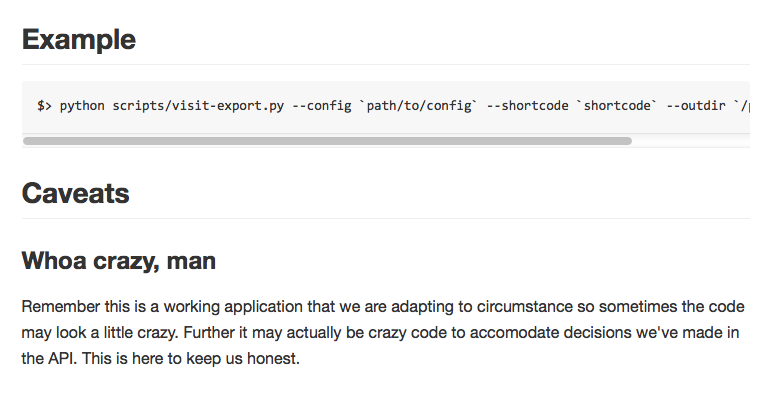

The /shop section is divided into two parts: Brands and Items (and all the items for a given brand, of course). There isn’t a whole lot of extra information beyond titles and links to the SHOP Cooper Hewitt website for those items that are currently in stock, but it’s a start. Like the rest of the collections website, we’ve started with the idea that by providing permanent, stable URLs that people can have confidence in, we create something that can be improved on over time.

Shop items and brands don’t get updated as regularly as we’d like yet. We are still working through the fiddly details of bridging our systems with the Shop’s ecommerce and POS system and some things still need to be done by hand. We’ve been able to get this far though so we expect things will only get better.

You might be wondering…

You might be reading this and starting to wonder: “Hmmm… does that mean I can also collect things in the Shop as I walk around the museum with the Pen?” The answer is… Yes!

As of this writing there are only one or two items that can be collected with the Pen because the Shop staff are still getting familiar with the tools and thinking about how making collect-able labels changes their day-to-day workflow. The obvious future of this might be the infamous ‘wedding register’; however, we believe that many museum visitors would actually like to bookmark objects to possibly buy later, or just to remember as part of their overall visit to the ‘museum campus’.

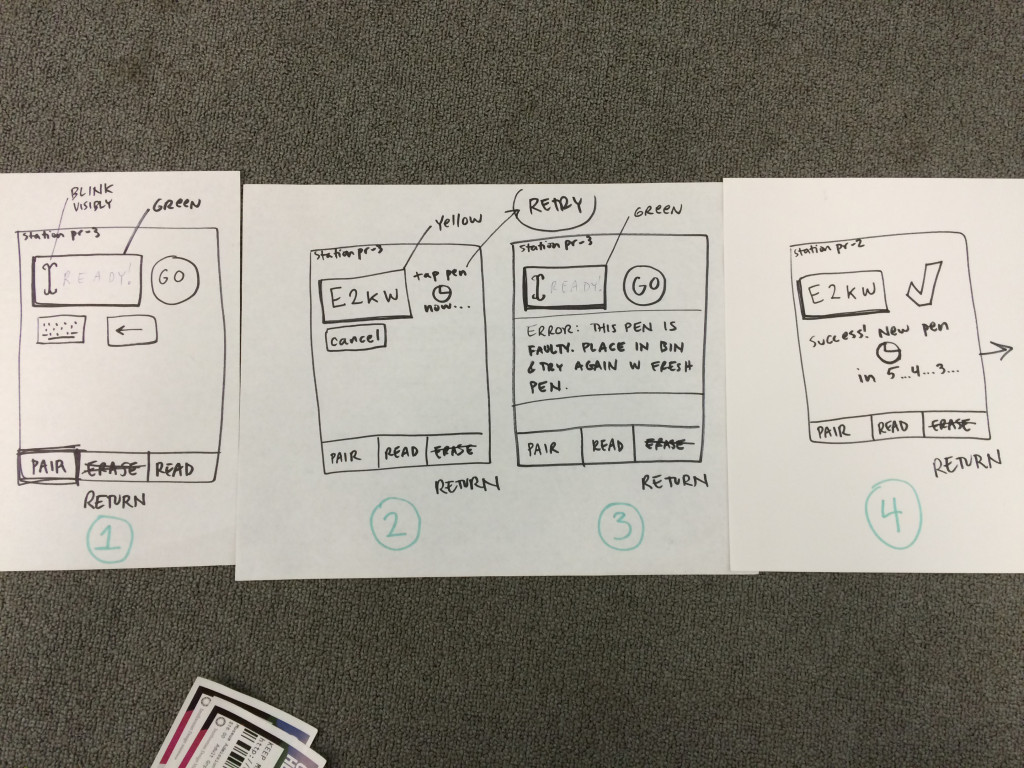

Practically, what that has meant is some changes to Sam’s “tag writer” application (the subject of a future blog post) to fetch shop items via our API and then letting the Shop folks decide what they want to tag and when they want to do it.

There has been a whole lot of change here over the course of the last three years. Allowing the various parts of the museum to warm up to the possibilities that the Pen starts to afford, at their own pace and with not only a minimum of fuss but plenty of wiggle-room for experimentation, is really important.

In the meantime we hope that you enjoy collecting at least more, if not all, of the things that make up the museum.

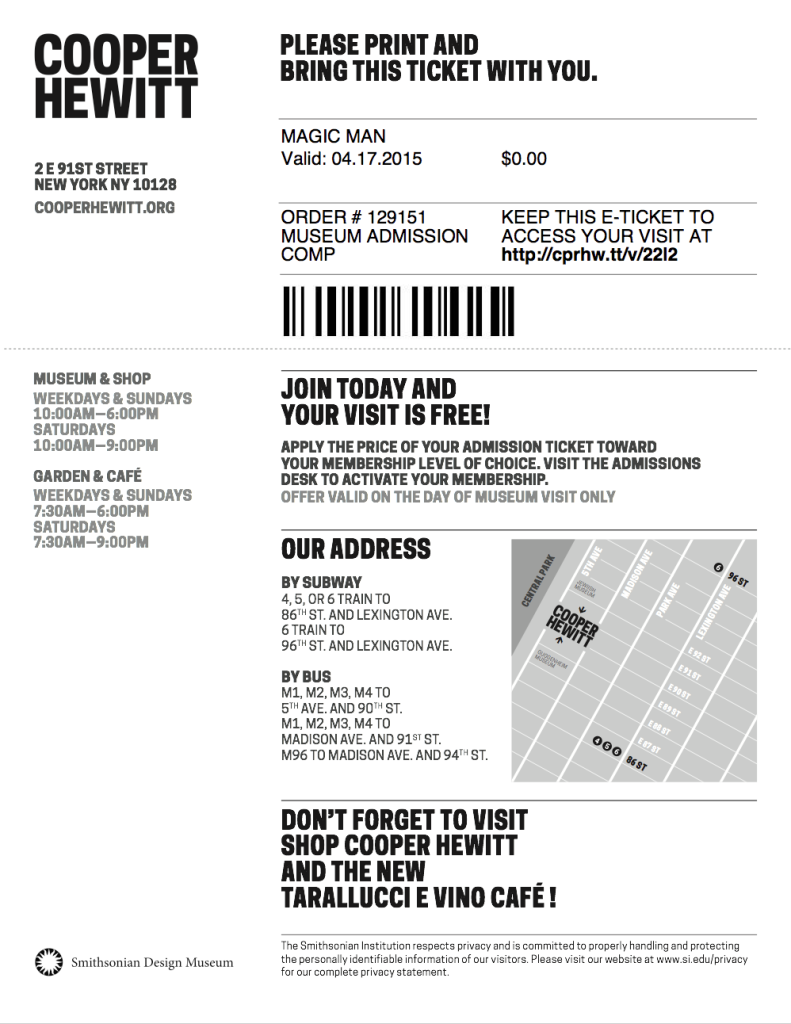

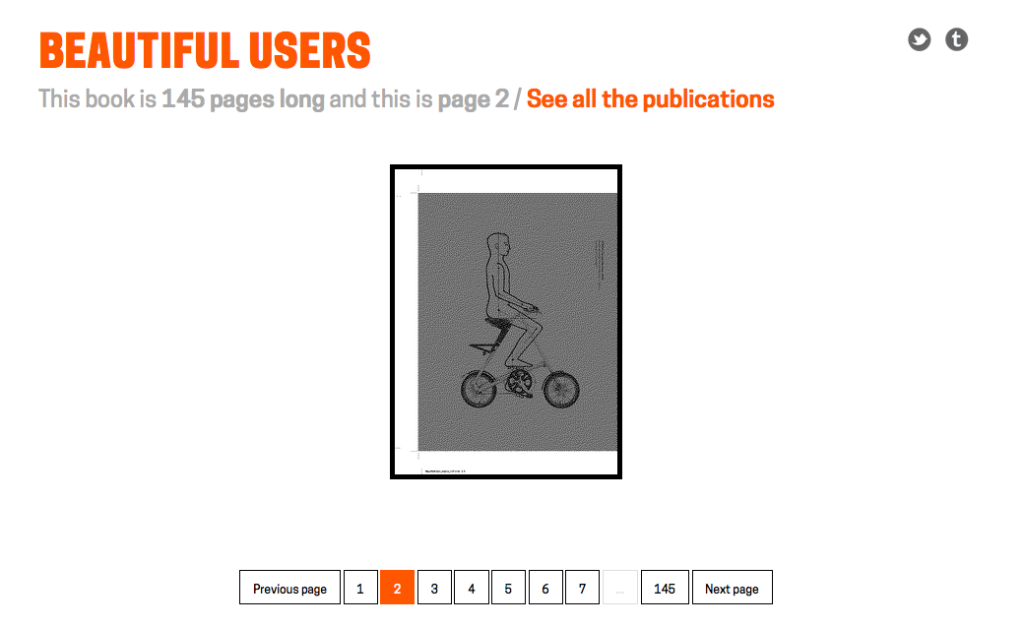

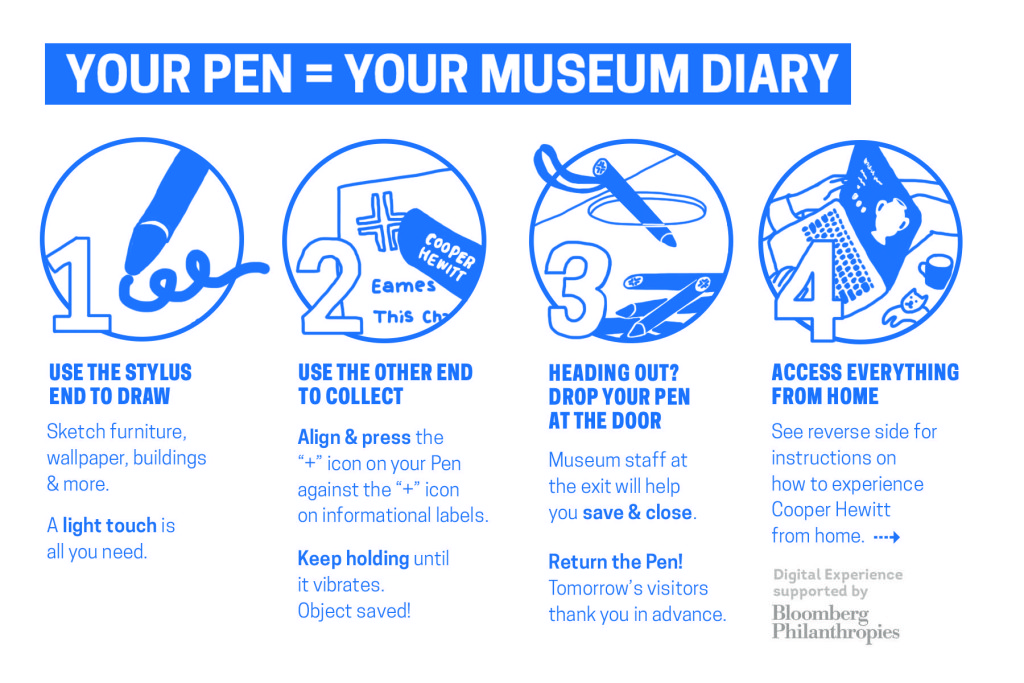

![Single visit 'lifecycle' of The Pen. Illustration by Katie Shelly, 2015.](https://www.cooperhewitt.org/wp-content/uploads/sites/2/2015/04/PenCycle_v3-1024x663.png)