The short version is:

There is now an integration between Tessitura & Mailchimp. If you are a Tessitura Licensee, and have access to their BitBucket account, you can get it here.

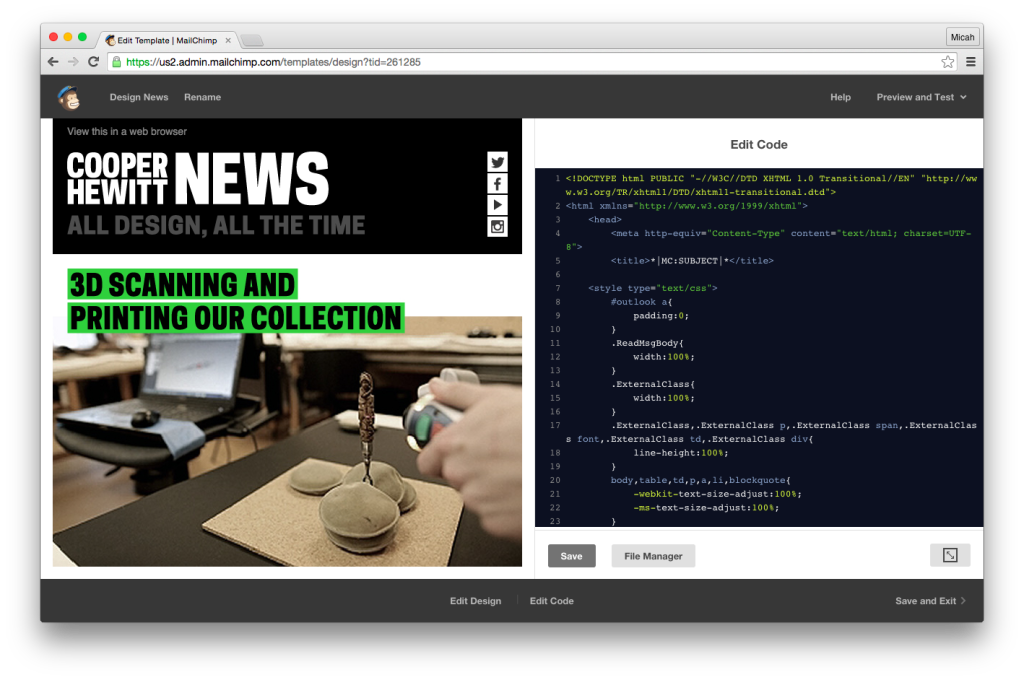

So you can use this lovely Mailchimp Interface to create your emails…

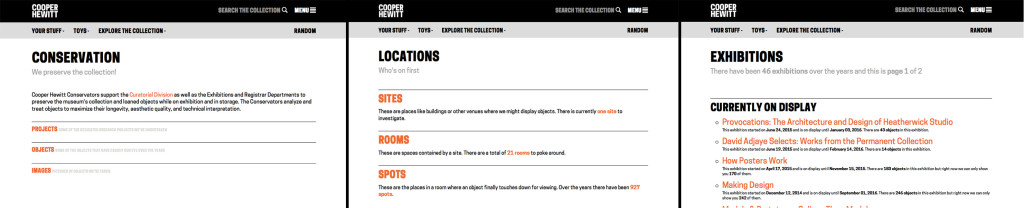

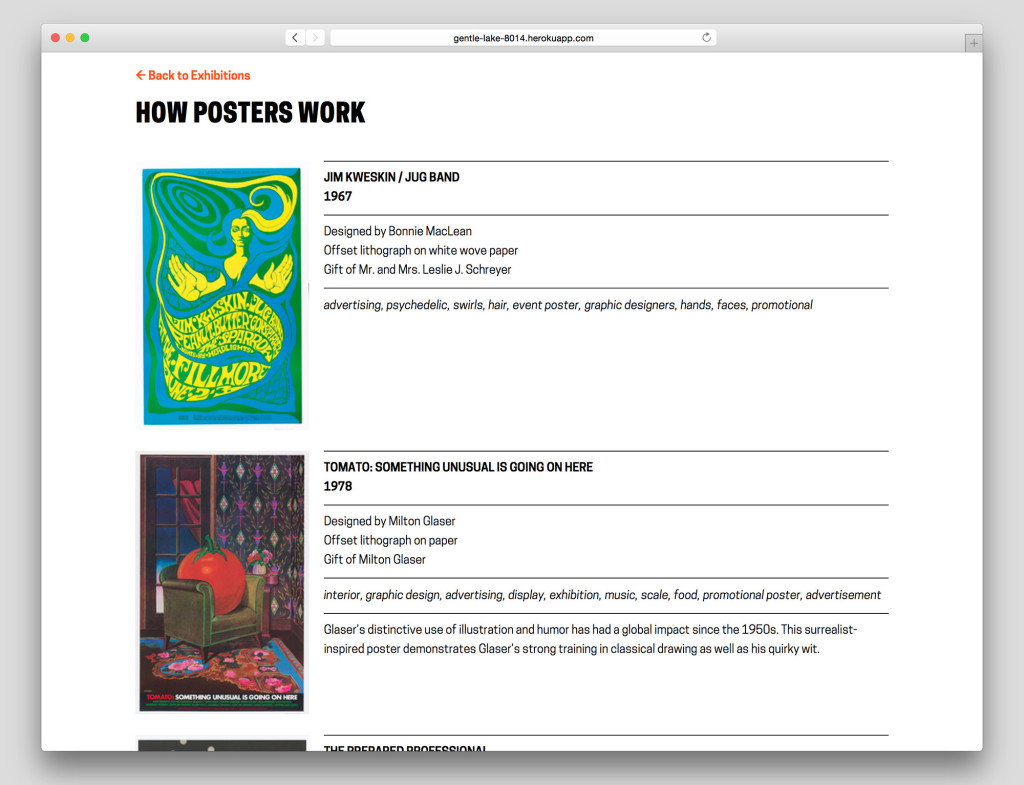

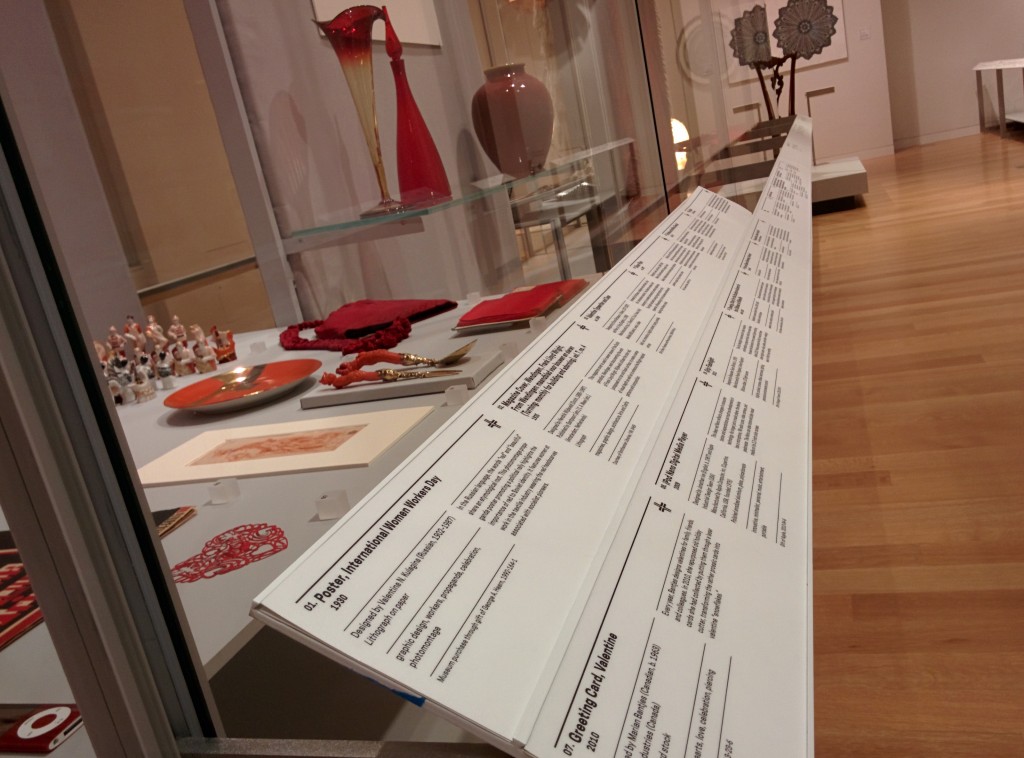

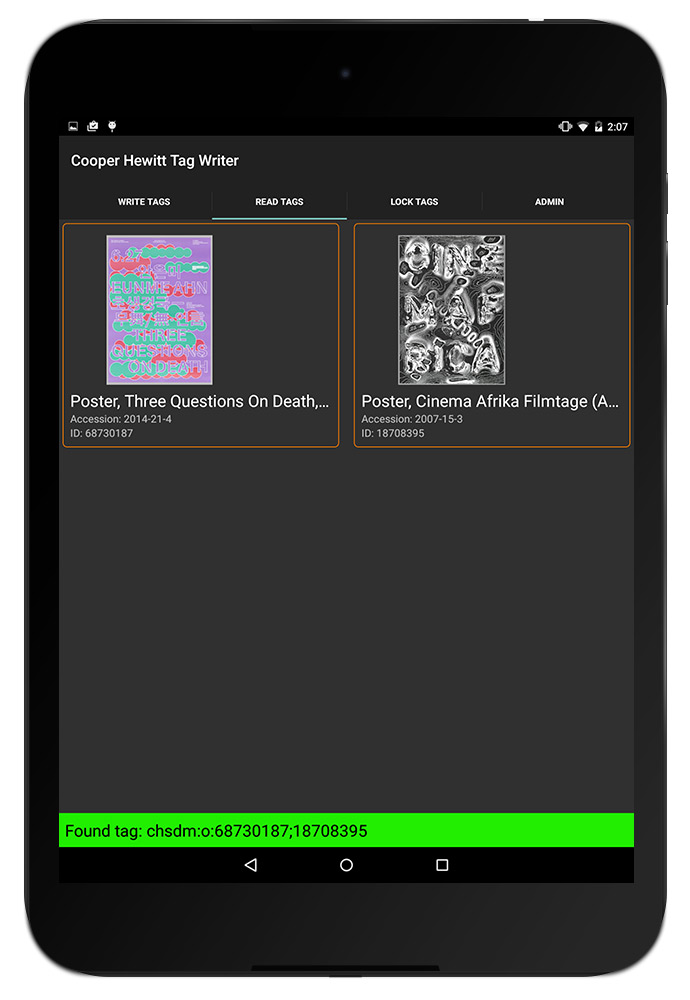

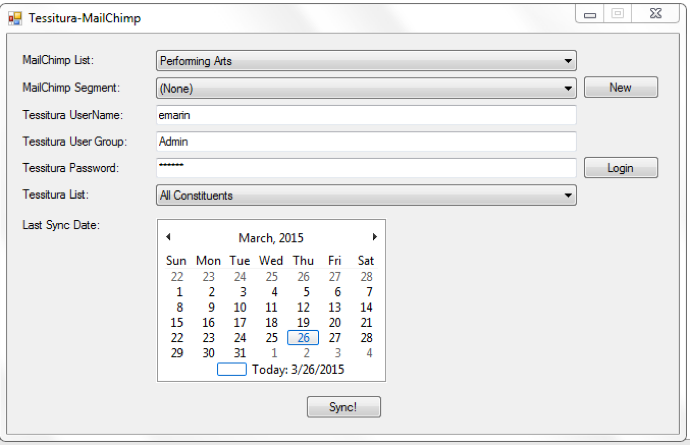

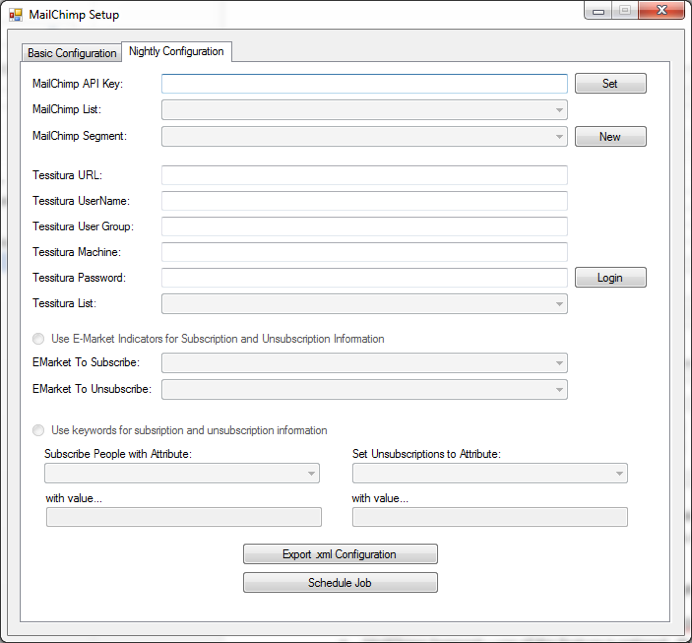

… and connect it with all of the power of Tessitura, through this easy-to-use tool.

The long version is:

It’s the last day of the Tessitura Learning & Community Conference, and I’m all checked out of my hotel, sitting in the conference hall, thinking about all of the things I’ve learned this week, and all of the people I’ve met.

So many of the people I’ve talked to have been asking about the Tessitura-Mailchimp Integration we launched in partnership with Mailchimp and JCA, Inc. this past week, and so I thought I’d write up a blog post to try and explain what it is, how you get it/use it/make it better, and more importantly, why we did it in the first place.

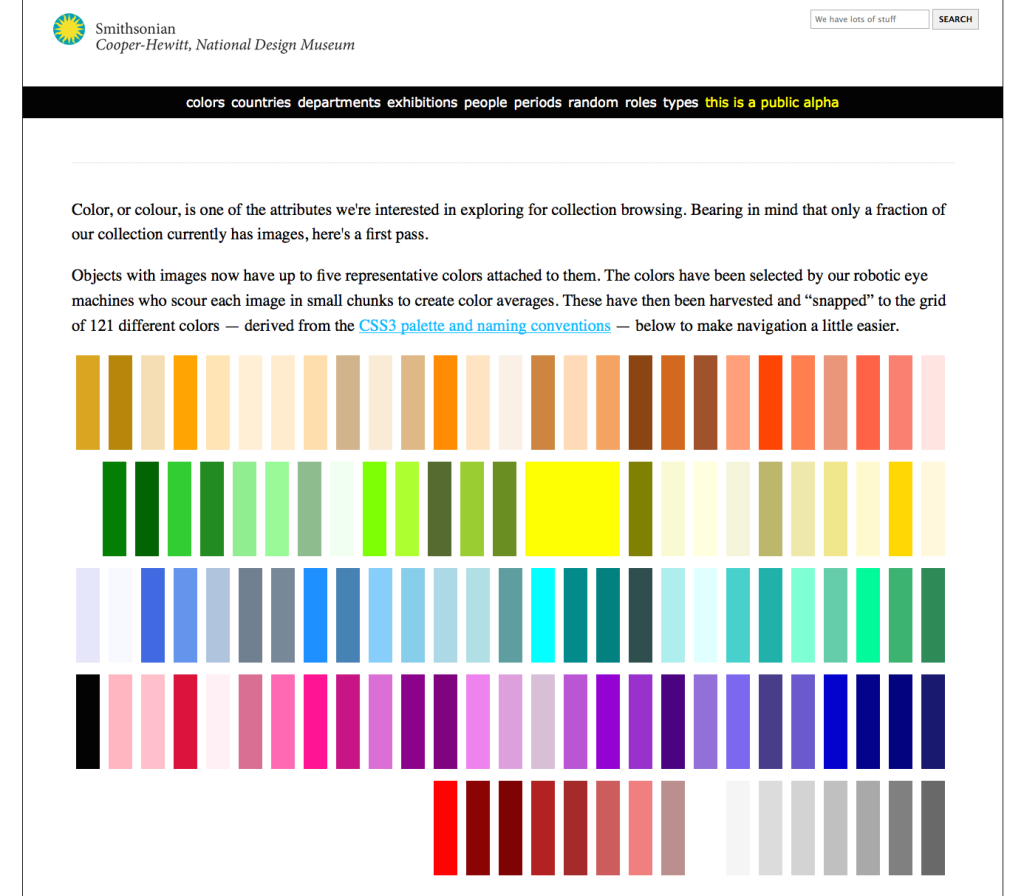

A long while ago, Cooper Hewitt had an enormous email list: some 60K emails on one massive list, powered by an e-marketing service that was clearly heading out of business. This giant list wasn’t working. We weren’t getting the results we thought we should, and what’s more, we had no way of measuring our success. So, we switched to Mailchimp. It was a pretty obvious choice. Mailchimp offered the museum an incredibly quick setup time and a beautiful user interface, with super clean and easy-to-use templates. What’s more, Mailchimp placed a lot of emphasis on “list quality” and advised us to put out an appeal to our current bloated list to do an “opt-in” and create a whole new list made up of real people, with valid email addresses, who actually wanted to receive mail from us.

The list dropped down. Way down. After a few “last chance” appeals, our 60K subscribers were whittled down to about 2500. This was challenging territory for many departments in the museum, which, as at almost every non-profit, relied on the large numbers more for a sense of security than for their effectiveness.

But we pressed on, and noticed quickly that our open rates were dramatically high. Our click-through rates were excellent, and it was clear that people were actually reading our emails, and acting on our calls to action. If you haven’t noticed by now, I’m trying to work as many marketing buzzwords into this post as possible. You know, due diligence and all.

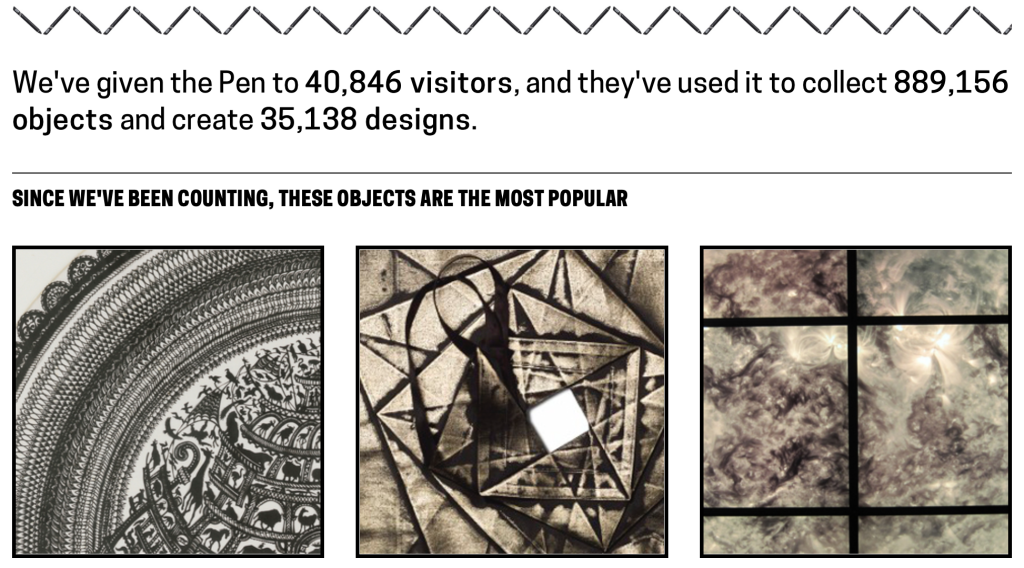

This is a long way around to explain that we all started to fall in love with Mailchimp. Its ease of use and deep analytics and reporting tools were a huge win for the museum as a whole. Our list continues to grow and our “satisfaction” rating remains pretty steadily on the high end. The staff seem to enjoy working in Mailchimp, especially following the recent redesign of the user interface.

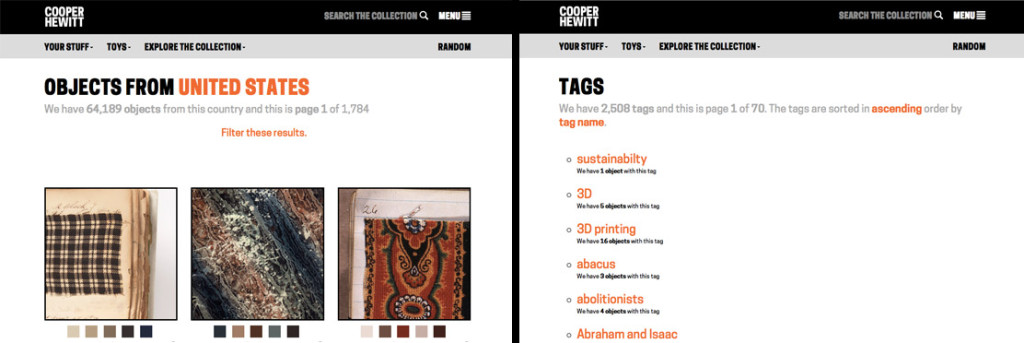

One day along the way, the museum decided to implement Tessitura as our CRM (constituent relationship management) and ticketing system. It’s a super robust, enterprise-class system that is sort of the Swiss Army knife for non-profits, performing arts centers, and more recently, museums.

In the long term strategic plan for Tessitura, it appeared as though we would have to ditch Mailchimp and move to one of the two providers that offer an integration with Tessitura. We looked at both of them, and while they both did the job at hand, neither of them offered the pleasant experience and incredible analytics tools that Mailchimp did. It would have been a tough sell to our staff to move them off something they clearly all had grown to love and on to a system that would probably work just fine, but not make their hearts any warmer.

So, we talked with Mailchimp. Mailchimp has a wide variety of third-party integrations, and we started to converse about what an integration with Tessitura would look like. We all got really excited at the possibilities, and so once a small amount of funding was secured, we partnered with JCA, Inc. to build us something.

Mailchimp was really excited about the idea, and being a forward-thinking tech company, they pushed us to make the integration free and open source. This is something we strongly believe in at Cooper Hewitt as well, so we worked with the staff at Tessitura, and figured out a way to share the code within the Tessitura Network, so as not to violate any non-disclosure agreements. Things were starting to take shape.

So what will it do, and how does it work?

We tried to limit the scope of the project to the bare essentials. We wanted to stay within our budget, and build a simple tool that does what it says on the tin. The hope here is that Tessitura licensees will try it out, see that it’s a good thing, and run with it, adding features and customizing it to suit their needs. Open source goodness.

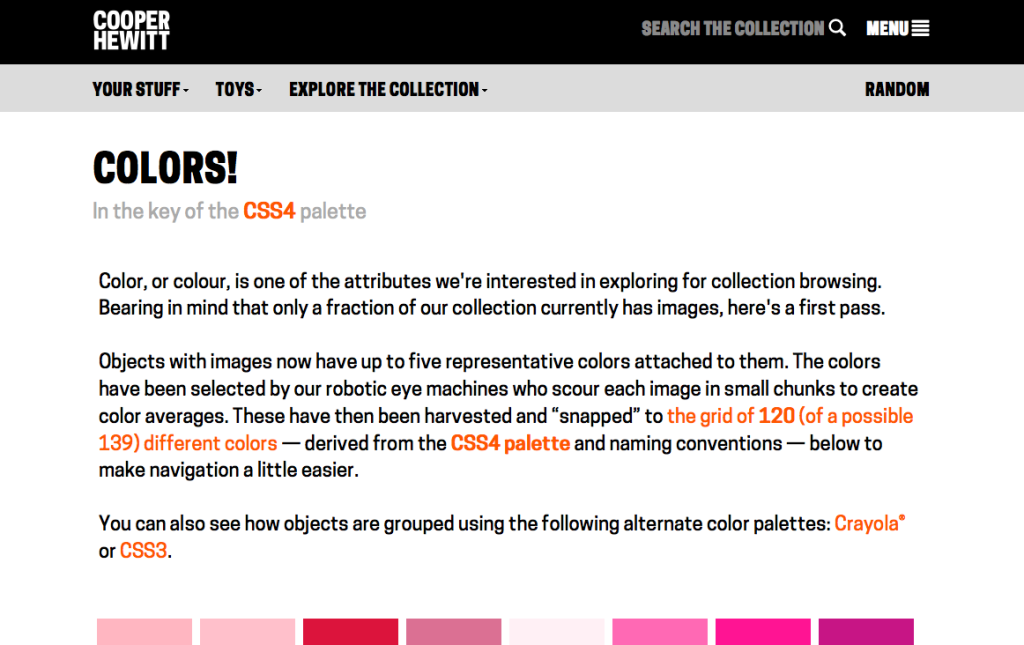

At the moment, the project is a pretty simple .NET application that anyone can install on a Windows machine that can talk to both Tessitura and Mailchimp. You fill out some initial config information, and then schedule a nightly synchronization job. This allows Tessitura licensees to export their primary lists on a nightly basis into Mailchimp.
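To make the nightly job a little more concrete, here is a rough sketch in Python of the kind of comparison a sync like this has to do. It is not the actual code from the integration (that is a .NET application, and its internals aren’t described here). The names `normalize`, `SyncPlan`, and `plan_nightly_sync` are made up for illustration, and I’m assuming both sides can be boiled down to plain lists of email addresses.

```python
from dataclasses import dataclass


def normalize(email: str) -> str:
    """Trim whitespace and lowercase so the same address always compares equal."""
    return email.strip().lower()


@dataclass(frozen=True)
class SyncPlan:
    """Hypothetical result of comparing the two systems."""
    to_add: frozenset               # in the Tessitura export, missing from Mailchimp
    no_longer_exported: frozenset   # in Mailchimp, absent from tonight's export


def plan_nightly_sync(tessitura_emails, mailchimp_emails) -> SyncPlan:
    """Work out what differs between tonight's export and the Mailchimp list."""
    source = {normalize(e) for e in tessitura_emails if e.strip()}
    target = {normalize(e) for e in mailchimp_emails if e.strip()}
    return SyncPlan(
        to_add=frozenset(source - target),
        no_longer_exported=frozenset(target - source),
    )


if __name__ == "__main__":
    plan = plan_nightly_sync(
        ["Ann@Example.org ", "bob@example.org"],
        ["bob@example.org", "old@example.org"],
    )
    print(sorted(plan.to_add))
    print(sorted(plan.no_longer_exported))
```

What to do with addresses that are no longer in the export (leave them alone, flag them, unsubscribe them) is a policy decision, and the sketch deliberately leaves it open.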

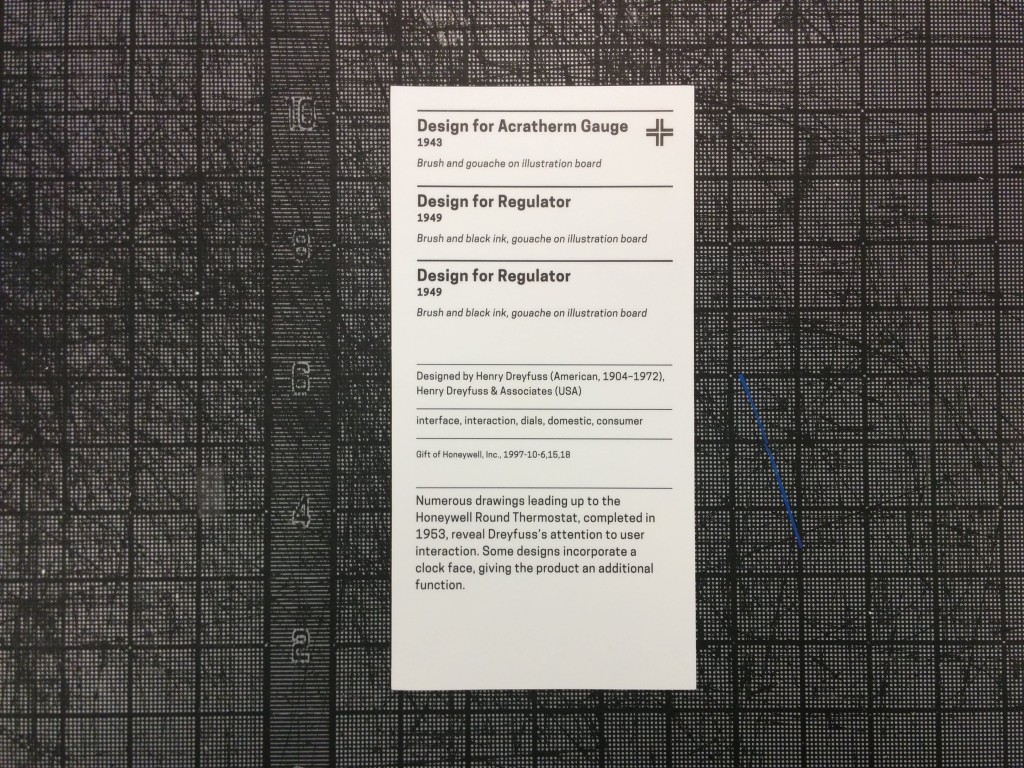

You can also perform synchronizations on an ad-hoc basis, meaning, any Tessitura user can easily create a segmented list in Tessitura for a specific purpose, and sync that list to Mailchimp for immediate sending.

This is a really nice feature, because it actually creates or updates a segment in Mailchimp. Rather than create many bespoke email lists, you can then just use a single master list in Mailchimp, and use the exported segments so you are only sending to the addresses you are interested in.
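If it helps to picture the “one master list, many segments” idea, here is a small Python sketch. Again, this is only an illustration under my own assumptions, not the integration’s real code: `sync_segment` is a hypothetical helper, the master list is modeled as a set of addresses, and segments are modeled as a dictionary of named sets.

```python
def sync_segment(master_list: set, segments: dict, name: str, emails) -> set:
    """Create or update a named segment on a single master list.

    Returns the addresses that were new to the master list.
    """
    members = {e.strip().lower() for e in emails if e.strip()}
    new_to_master = members - master_list
    master_list |= members        # everyone in the segment is on the master list
    segments[name] = members      # create the segment, or replace its members
    return new_to_master


if __name__ == "__main__":
    master = {"ann@example.org"}
    segments = {}
    added = sync_segment(master, segments, "Spring Gala",
                         ["Ann@example.org", "cy@example.org"])
    print(sorted(added))
    print(sorted(segments["Spring Gala"]))
```

Running the same sync again with a different set of addresses simply replaces the segment’s members, which is the “creates or updates” behavior described above, while the master list only ever grows.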

What it’s not

It’s important to understand that this is an open source tool and is provided “as is.” There is no support staff waiting to take your calls and answer your questions. This remains the responsibility of the Tessitura community.

As I mentioned, it’s a simple tool, and at the moment, it basically does the two things I’ve outlined above. There is no syncing of analytics data back into Tessitura, for example. We really love the analytics tools built right into Mailchimp, and so for most this may not be a deal breaker. These are the kinds of features we hope will get added by the community down the road.

What it is, again.

It’s a super exciting thing for us to all think about! The Tessitura community really needs to take more control over the entire ecosystem of third-party applications and extensions. Without a vested interest in building our own tools, open sourcing the work we are all doing, and joining in the conversation about direction and strategy, the community will always be waiting on the next update from those vendors who have chosen to build products on top of the system.

How do I get it?

First, you need to be a Tessitura Network licensee. Then, you just need access to the Tessitura Network code sharing site on BitBucket. You can get this by sending an email to web_dev@tessituranetwork.com. Once you are there, you can go here and download the code, or the binaries, to try it out on your system. The repository has a README with all the relevant info on how to install it from scratch, build from the source, and set things up in Mailchimp. If you aren’t able to build from the source, you can download the compiled binaries and just try it out.

How do I contribute?

If you are a Tessitura Network licensee, and you’ve gotten this far, read the README to get the full picture on how to fork the code and contribute. For the time being, feel free to log issues and send feature requests, and I will do my best to follow up on them and help get them resolved. Eventually, we hope that someone within the community will pick up the torch and help us continue to develop what we think is a really valuable integration and option for the broader Tessitura community.

Reminder: First, you need to be a Tessitura Network licensee. Then, you just need access to the Tessitura Network code sharing site on BitBucket. You can get this by sending an email to web_dev@tessituranetwork.com.