Now that I’ve written this blog post it occurs to me that it would be trivial to build something similar on top of the Cooper Hewitt Collections API — since that’s ultimately where all this colour stuff comes from — so I will probably do that shortly and stick in it the Play section.

That’s something I wrote last week on my personal weblog. I was writing about a little web “application” that I’d made to generate algorithmic “multiforms” that recall the work of the late painter Mark Rothko. The source of the colors used to create these robot-multiforms are derived from photo uploads and extracted using the same code that the Cooper Hewitt uses to generate color palettes for the objects in our collection. We wrote about that process last year.

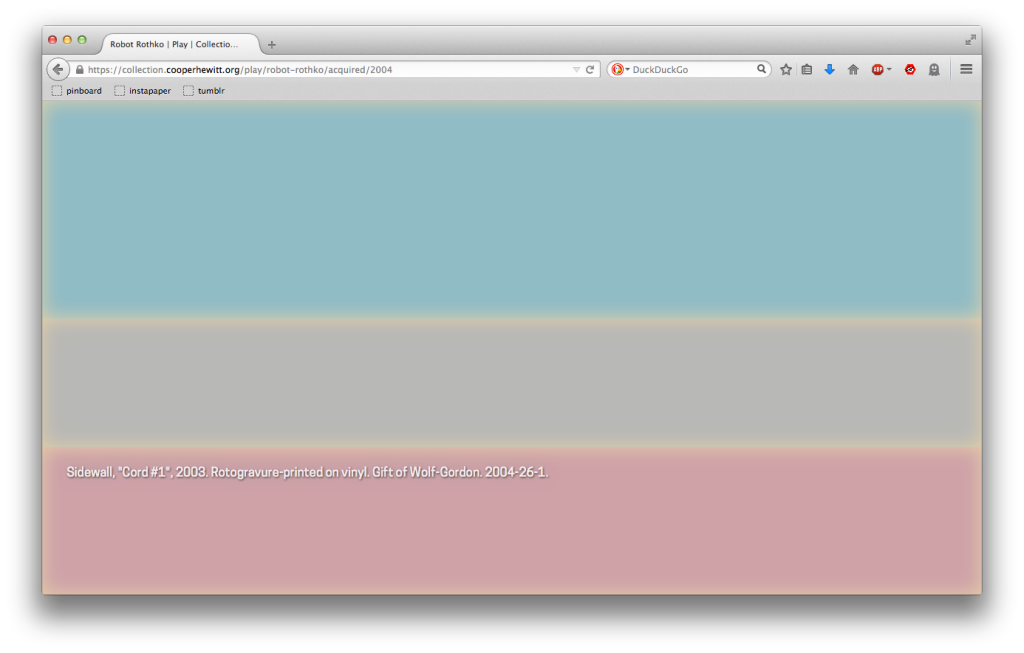

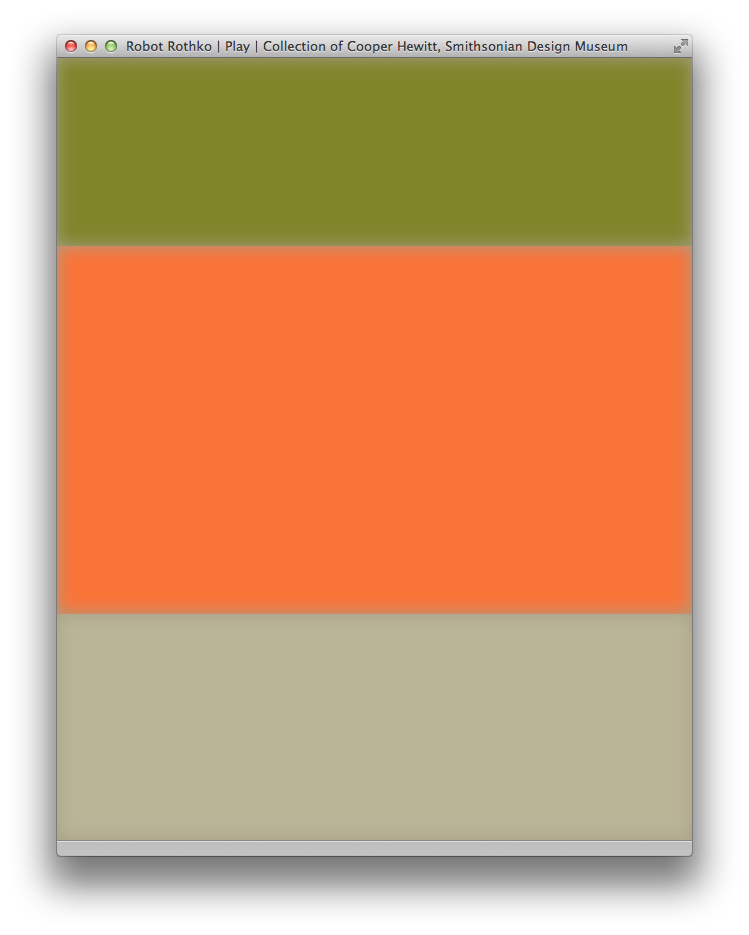

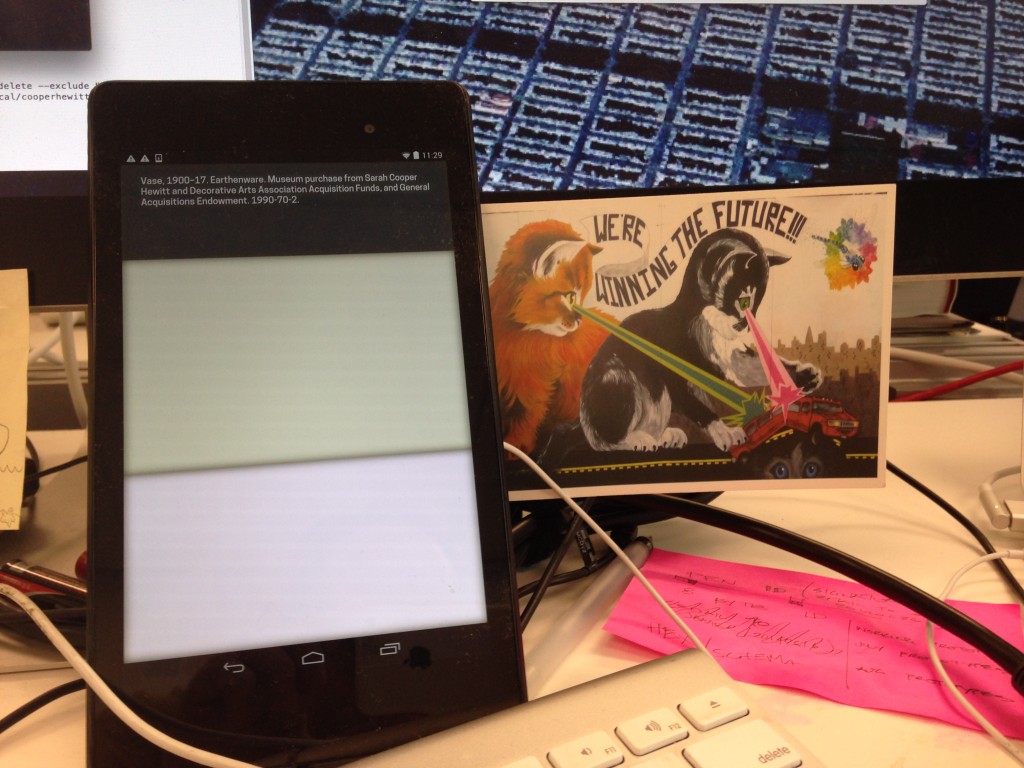

These robot “paintings” are built by fetching three photos and using their primary color to fill one of three stacked rectangles that make up the canvas. A dominant color for a fourth photo is used along with an inset CSS3 box-shadow to give the illusion a fuzzy, hazy background on which the rectangles sit. Every 60 seconds a new version is generated and the colors (and boxes) gently transition from old to new.

In that original blog post, I also wrote:

That’s it. It doesn’t do anything else and that’s part of the charm for me. It just sits in the background running in second-screen-mode stamping out robot-Rothko paintings. … It’s nice to have a new screen friend to spend the days the days with

They’re not really Rothko paintings, obviously, and to suggest that they are would do the painter a disservice. Rothko’s paintings are not just any random set of colors stacked on top of one another. Rothko worked long and hard to choose the arrangement of his paintings and it’s easy to imagine that he would have been horrified by some of the combinations that Robot Rothko offers up. But like the experimental Albers Boxes feature they are a nod and gesture – and a wink – towards the real thing.

Having gotten things working for a personal non-museum and not-really-for-strangers project I decided that it would be nice to do something similar for for the museum which is absolutely for everyone. So, today we are launching Robot Rothko which is exactly the same as the application described above except that it uses objects from our collection instead of photos as its source material. Like this:

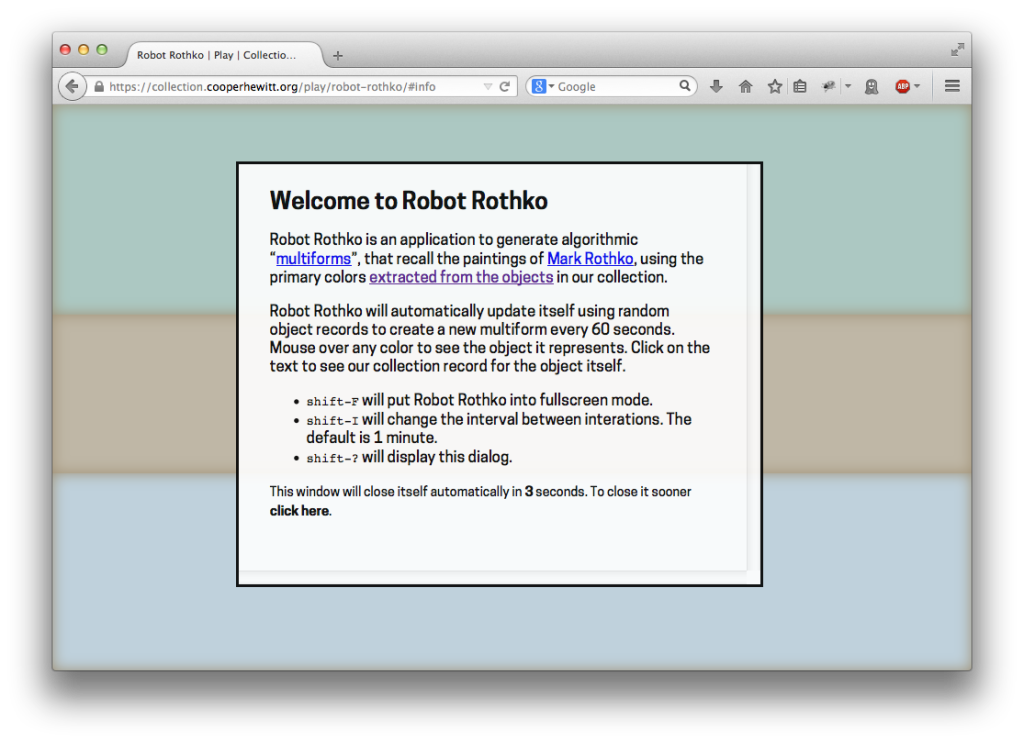

https://collection.cooperhewitt.org/play/robot-rothko/#info

See the #info part of that URL? That will cause the application to load with an information box that explaining what you’re looking at (and that will close itself automatically after 30 seconds). If you just want to jump straight to the application all you have to do is remove the #info from the URL.

https://collection.cooperhewitt.org/play/robot-rothko/

Robot Rothko will automatically update itself using random object records to create a new multiform every 60 seconds. Mouse over any color to see the object it represents. Click on the text to see our collection record for the object itself.

You can also filter stuff by person, decade. You can also filter by the year we acquired an object if you can guess where it is; that one still feels a little buggy so we’re going to hold off publishing the URL until we can figure out what’s wrong. Here are some examples of the first two:

https://collection.cooperhewitt.org/play/robot-rothko/people/18046041

https://collection.cooperhewitt.org/play/robot-rothko/decade/1910

Robot Rothko is native to the web which means it will work in any modern web browser whether it’s on your desktop or your phone or your tablet. It can be put it to fullscreen mode (by pressing shift-F) and if you save the website’s URL to your homescreen on your phone, or tablet, it is configured to launch without any of the usual browser chrome. If you use a Mac you can plug the URL for Robot Rothko in to Todd Ditchendorf’s handy Fluid.app which will turn it all in to a shiny desktop application. I am guessing there are equivalent tools for Windows or Linux but I don’t know what they are.

If you’d like to generate your own Robot Rothkos there’s an API method for doing just that:

https://collection.cooperhewitt.org/api/methods/cooperhewitt.play.robotRothko

And of course it works with our recently announced support for DSON as a response format:

curl -X GET 'https://api.collection.cooperhewitt.org/rest/?method=cooperhewitt.play.robotRothko&access_token=SEEKRET&person_id=18041501&format=dson'such "rothko" is such "canvas" is so "49" and "28" and "23" many and "palette" is so such "colour" is "#b8ab5b" , "id" is "18805769" , "epitaph" is "Folding Fan, 1900u201305. Medium: silk, wood, horn, metal, metal spangles. Gift of Lillian C. Hart. 1985-89-1." wow ? such "colour" is "#c7c7c7" . "id" is "18640557" ! "epitaph" is "Drawing, "Two Studies for Rectangul", ca. 1965. Pen and black ink on white wove paper. Gift of Vladimir Kagan. 1992-56-7." wow , such "colour" is "#db8952" , "id" is "18133219" , "epitaph" is "Fragment, mid-18th century. Medium: silknTechnique: plain weave patterned by supplementary warp floats and complementary weft floats. Gift of John Pierpont Morgan. 1902-1-811." wow many ? "background" is such "colour" is "#c7a9af" . "id" is "18761047" ! "epitaph" is "Booklet Cover Sheet, 1916. Color woodcut on lavender wove paper paper. Museum purchase from Drawings and Prints Council Fund and through gift of Margery and Edgar Masinter and Merrill C. Berman. 1999-50-1-3." wow wow , "filters" is so many and "stat" is "ok" wowRobot Rothko lives in a new section of the collections website called “Play“. The distinction between the Play section and the Experimental Features section of the website can probably be easiest thought of as: Experimental features are things that apply to the entirety of the collections website, while Play things are small contained applications that use the collections API and focus on or build off a particular aspect of the collection. The first of these was Sam Brenner’s SkyDesigner and Robot Rothko is actually the third such application.

In between those two was What Would Micah Say? (WWMS) a quick end-of-day project to test out the W3C’s Text-to-Speech APIs that are starting to appear in some web browsers (read: Chrome and Safari as of this writing, and make sure you have the volume turned up). The WWMS “application” was mostly a simple 20-minute exercise to test whether fetching some content dynamically and feeding to the text-to-speech APIs actually works and produces something useable. It does, which is very exciting because it opens up any number of accessibility-related improvements we can starting thinking about adding to the collections website.

That we happened to use the cooperhewitt.labs.whatWouldMicahSay API method and then configured the text-to-speech API to read his words as if spoken by a “French” robot made it all a little bit silly and a little more fun but those are important considerations. Because sometimes playing at – or making interesting – a technical problem is the best way to work through whether it is even worth pursuing in the first place.