Extract from “Outline map of New York Harbor & vicinity : showing main tidal flow, sewer outlets, shellfish beds & analysis points.”, New York Bay Pollution Commission, 1905. From New York Public Library.

Beneath our cities lie vast, labyrinthine sewer systems. These have been key infrastructures allowing our cities to grow larger, grow more densely, and stay healthy. Yet, save for passing interests in Urban Exploration (UrbEx), we barely think of them as ‘beautifully designed systems’. In their time, the original sewer systems were critical long term projects that greatly bettered cities and the societies they supported.

In some ways what the Labs has been working on over the past few years has been a similar infrastructure and engineering project which will hopefully be transformative and enabling for our institution as a whole. As SFMOMA’s recent post, which included an interview with Labs’ Head of Engineering, Aaron Cope, makes clear, our API, together with the collection site that it is built upon, is a carrier for a new type of institutional philosophy.

Underneath all our new shiny digital experiences – the Pen, the Immersion Room, and the rest – as well as the refreshed ‘services layer’ of ticketing, Pen checkouts, and object label management, lies our API. There’s no readymade headline or Webby award awaiting a beautifully designed API – and probably there shouldn’t be. These things should just work and provide the benefit to their hosts that they promised.

So why would a museum burden itself with making an API to underpin all its interactive experiences – not just online but in-gallery too?

It’s about sustainability. Sustainability of content, sustainability of the experiences themselves, and also, importantly, a sustainability of ‘process’. A new process whereby ideas can be tested and prototyped as ‘actual things’ written in code. In short, as Larry Wall said, it’s about making “easy things easy and hard things possible”.

The overhead it creates in the short term is more than made up for in future savings. Where it might be prudent to take short cuts and create a separate database here, a black box content library there, the fallout would be unchanging future experiences unable to be expanded upon, or, critically, rebuilt and redesigned by internal staff.

Back at my former museum, then Powerhouse web manager Luke Dearnley wrote an important paper in 2011 on the reasons to make your API central to your museum. There the API was used internally to do everything relating to the collection online but it only had minor impact on the exhibition floor. Now at Cooper Hewitt the API and exhibition galleries are tightly intertwined. As a result there’s a definite ‘API tax’ that is being imposed on our exhibition media partners – Local Projects and Tellart especially – but we believe it is worth it.

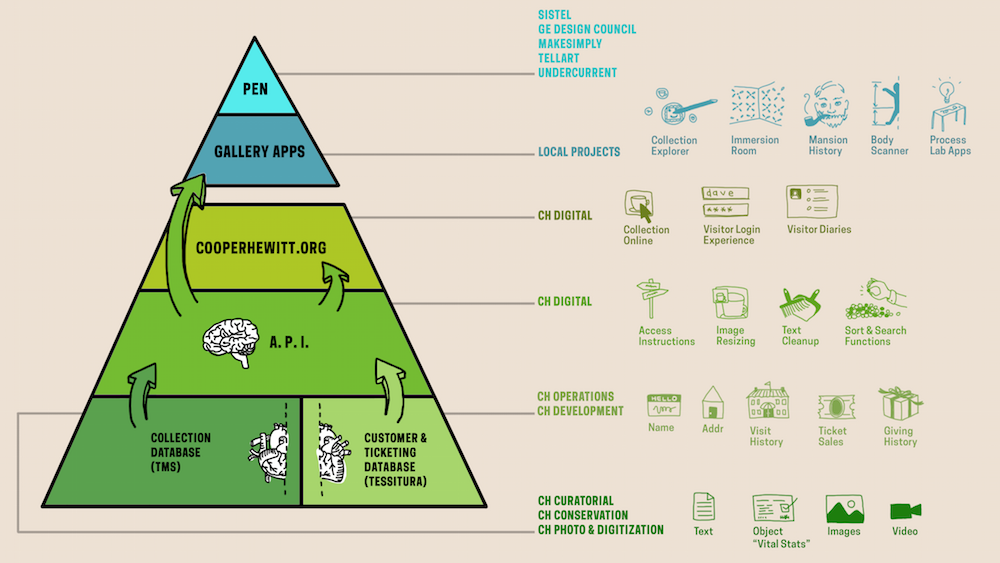

So here’s a very high level view of ‘the stack’ drawn by Labs’ Media Technologist, Katie.

At the bottom of the pyramid are the two ‘sources of truth’. Firstly, the collection management system into which is fed curatorial knowledge, provenance research, object labels and interpretation, public locations of objects in the galleries, and all the digitised media associated with objects, donors and people associated with the collection. There’s also now the other fundamental element – visitor data. This is stored securely in Tessitura, which operates as a ticketing system for the museum and, in the case of the API, as an identity provider where needed to allow for personalisation.

The next layer up is the API which operates as a transport between the web and both the collection and Tessitura. It also enables a set of other functions – data cleanup and programmatic enhancement.

Most regular readers have already seen the API – apart from TMS, the Collection Management System, it is the oldest piece of the pyramid. It went live shortly after the first iteration of the new collections website in 2012. But since then it has been growing with new methods added regularly. It now contains not only methods for collection access but also user authentication and account structures, and anonymised event logs. The latter of these opens up all manner of data visualization opportunities for artists and researchers down the track.
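To make the idea of the API as a transport layer a little more concrete, here is a minimal sketch in Python. It is not our actual implementation: the class, the method names (`objects.getInfo`, `visitors.getByTicket`), the response shape, and the two in-memory dictionaries standing in for the collection management system and the ticketing system are all hypothetical, chosen only to show how clients can talk to named methods rather than to the underlying sources of truth.

```python
from typing import Callable, Dict

# Hypothetical stand-ins for the two 'sources of truth'.
COLLECTION = {"obj-1": {"title": "Example chair", "on_display": True}}
TICKETS = {"ticket-001": {"visitor_id": "v-42"}}


class Api:
    """Thin dispatch layer: clients only ever call named methods."""

    def __init__(self) -> None:
        self._methods: Dict[str, Callable[..., dict]] = {}

    def method(self, name: str):
        """Decorator that registers a handler under a public method name."""
        def register(fn: Callable[..., dict]) -> Callable[..., dict]:
            self._methods[name] = fn
            return fn
        return register

    def call(self, name: str, **params) -> dict:
        """Look up a method by name and wrap its result in a status envelope."""
        handler = self._methods.get(name)
        if handler is None:
            return {"stat": "error", "message": f"unknown method: {name}"}
        try:
            return {"stat": "ok", **handler(**params)}
        except LookupError as exc:
            return {"stat": "error", "message": str(exc)}


api = Api()


@api.method("objects.getInfo")
def objects_get_info(object_id: str) -> dict:
    # Reads from the (hypothetical) collection store.
    if object_id not in COLLECTION:
        raise LookupError(f"no such object: {object_id}")
    return {"object": COLLECTION[object_id]}


@api.method("visitors.getByTicket")
def visitors_get_by_ticket(ticket_id: str) -> dict:
    # Reads from the (hypothetical) ticketing store, acting as identity provider.
    if ticket_id not in TICKETS:
        raise LookupError(f"no such ticket: {ticket_id}")
    return {"visitor_id": TICKETS[ticket_id]["visitor_id"]}


response = api.call("objects.getInfo", object_id="obj-1")
print(response["stat"], response["object"]["title"])
```

Because every client goes through `call`, a new method can be added, or a backing store swapped out, without touching the website or the gallery interfaces that sit above it.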

In the web layer there is the public website but also for internal museum users there are small web applications. These are built upon the API to assist with object label generation, metadata enhancement, and reporting, and there’s even an aptly-named ‘holodeck’ for simulating all manner of Pen behaviours in the galleries.

Above this are the two public-facing gallery layers: the application and interfaces designed and built on top of the API by Local Projects and the Pen’s ecosystem of hardware registration devices designed by Tellart, and then the Pen itself, which operates as a simple user interface in its own right.

What is exciting is that all the API functionality that has been exposed to Local Projects and Tellart to build our visitor experience can also progressively be opened up to others to build upon.

Late last year students in the Interaction Design class at NYU’s ITP program spent their semester building a range of weird and wonderful applications, games and websites on top of the basic API. That same class (and the interested public in general) will have access to far more powerful functionality and features once Cooper Hewitt opens in December.

The API is here for you to use.
