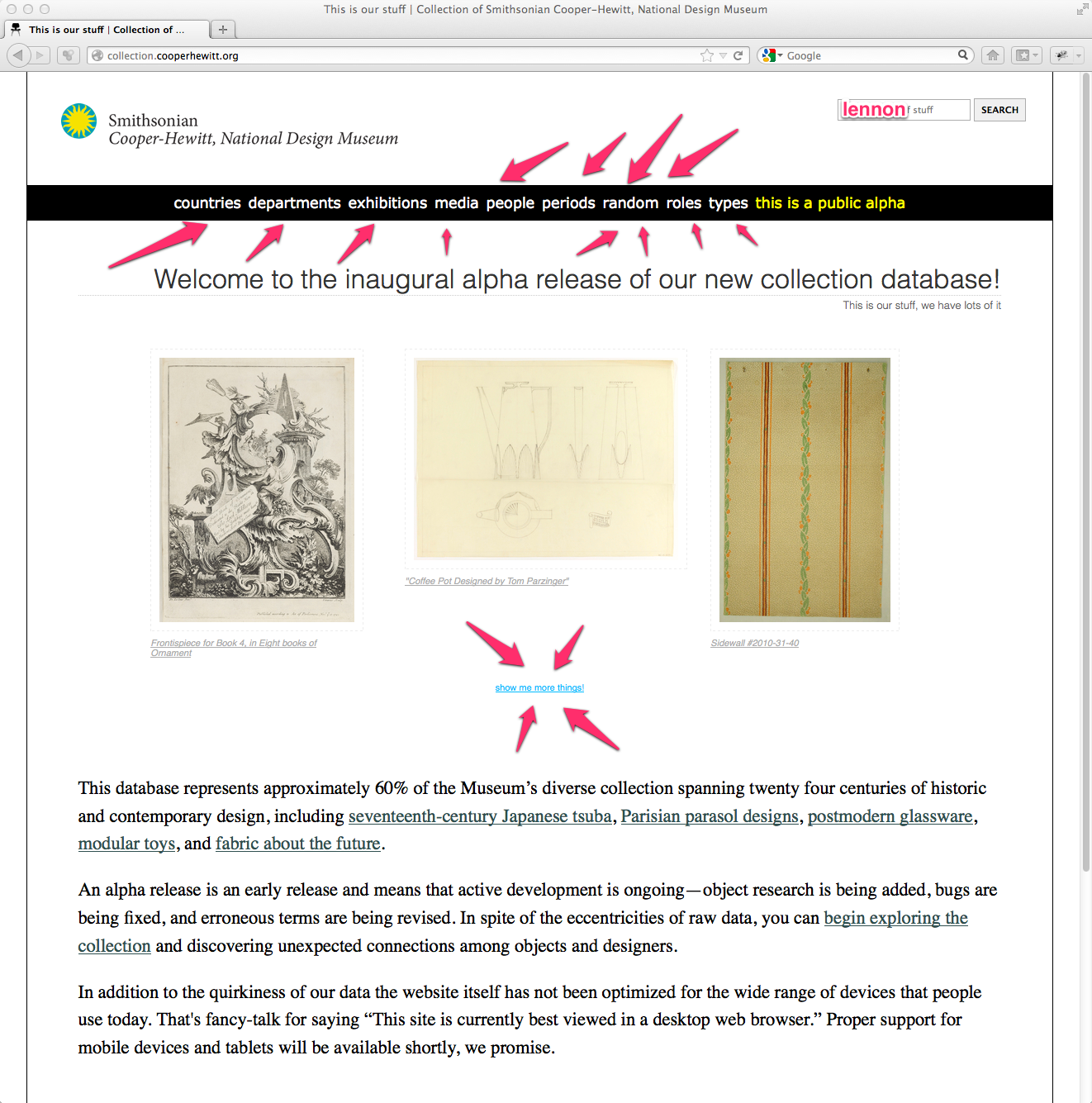

We made a new thing. It is a nascent thing. It is an experimental thing. It is a thing we hope other people will help us “kick the tires” around.

It’s called “Who’s on first?” or, more accurately, “solr-whosonfirst”. solr-whosonfirst is an experimental Solr 4 core for mapping person names between institutions using a number of tokenizers and analyzers.

How does it work?

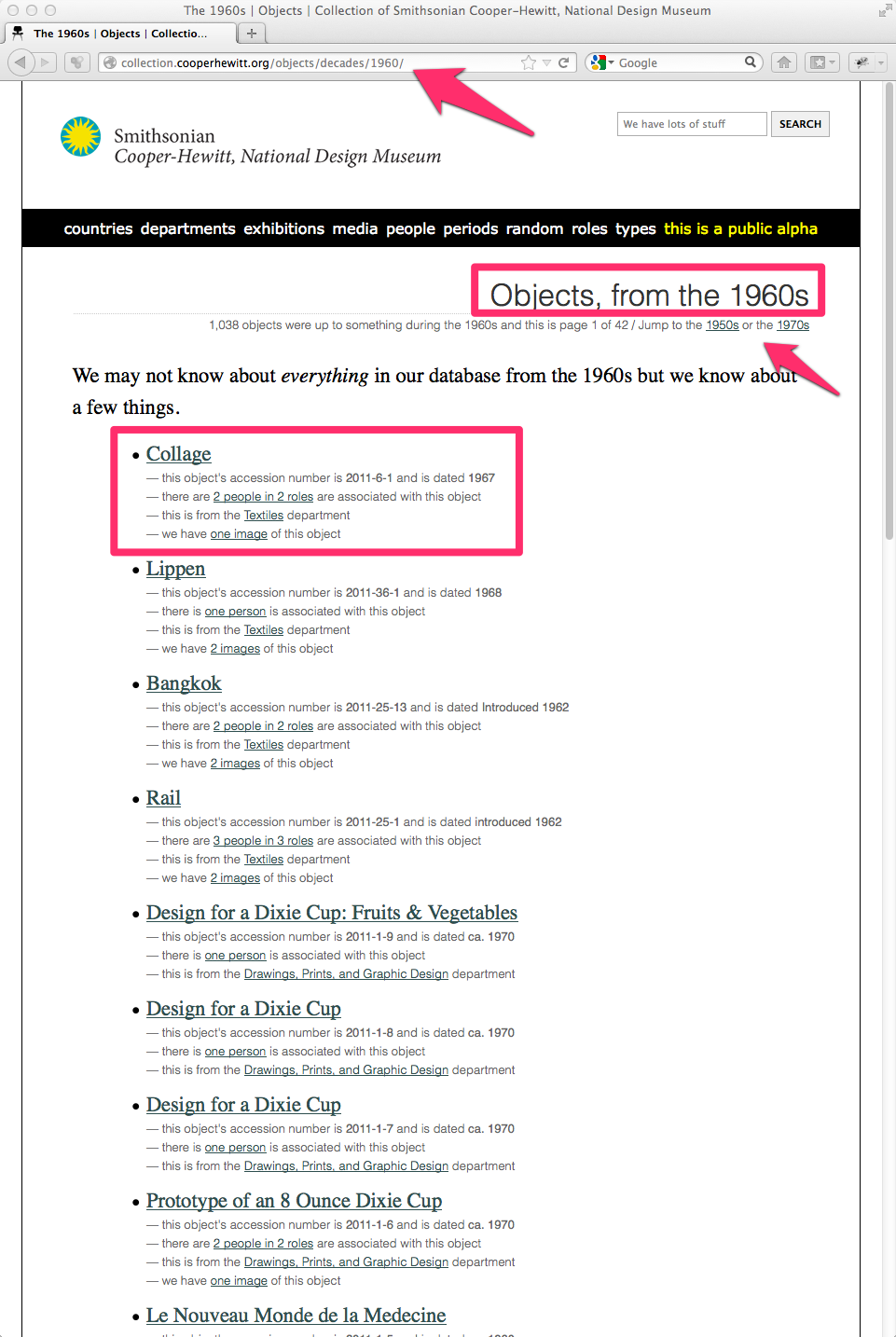

The core contains the minimum viable set of data fields for doing concordances between people from a variety of institutions: collection, collection_id, name and, when available, year_birth and year_death.

The value of name is then meant to be copied (literally, using Solr copyField definitions) to a variety of specialized field definitions. For example, the name field is copied to a name_phonetic field so that you can query the entire corpus for names that sound alike.

Right now there are only two such fields, both of which are part of the default Solr schema: name_general and name_phonetic.
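To make “names that sound alike” a little more concrete, here is a minimal sketch of a phonetic key in Python. It uses classic Soundex purely as an illustration. The name_phonetic field relies on whatever phonetic analyzer the Solr schema configures, which may well be a different encoder, and the function name `soundex` is ours rather than anything in the core.

```python
def soundex(name: str) -> str:
    """Classic American Soundex: first letter plus three digits.

    Illustration only; not necessarily the encoder behind name_phonetic.
    """
    codes = {}
    for letters, digit in (("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
                           ("L", "4"), ("MN", "5"), ("R", "6")):
        for letter in letters:
            codes[letter] = digit

    letters = [c for c in name.upper() if c.isalpha()]
    if not letters:
        return ""

    first = letters[0]
    encoded = first
    previous = codes.get(first, "")
    for letter in letters[1:]:
        digit = codes.get(letter, "")
        # Skip uncoded letters and immediate repeats of the same code.
        if digit and digit != previous:
            encoded += digit
        # H and W do not separate letters with the same code; vowels do.
        if letter not in "HW":
            previous = digit
    # Pad with zeros or truncate so the key is always four characters.
    return (encoded + "000")[:4]


print(soundex("Moggridge"), soundex("Mogridge"))
print(soundex("Dreyfuss"), soundex("Dreifuss"))
```

Spellings that reduce to the same key end up in the same bucket, which is roughly what querying a phonetic field buys you.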

The idea is to compile a broad collection of specialized fields to offer a variety of ways to compare data sets. The point is not to presume that any one tokenizer / analyzer will be able to meet everyone’s needs but to provide a common playground in which we might try things out and share tricks and lessons learned.

Frankly, just comparing the people in our collections using Solr’s built-in spellchecker might work as well as anything else.

For example:

$> curl 'https://localhost:8983/solr/select?q=name_general:moggridge&wt=json&indent=on&fq=name_general:bill'

    {"response":{"numFound":2,"start":0,"docs":[
      {
        "collection_id":"18062553",
        "concordances":[
          "wikipedia:id=1600591",
          "freebase:id=/m/05fpg1"],
        "uri":"x-urn:ch:id=18062553",
        "collection":"cooperhewitt",
        "name":["Bill Moggridge"],
        "_version_":1423275305600024577},
      {
        "collection_id":"OL3253093A",
        "uri":"x-urn:ol:id=OL3253093A",
        "collection":"openlibrary",
        "name":["Bill Moggridge"],
        "_version_":1423278698929324032}]
    }
    }

Now, we’ve established a concordance between our record for Bill Moggridge and Bill’s author page at the Open Library. Yay!
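If you would rather poke at the core from a script than from curl, the same query can be sketched in Python using only the standard library. The base URL is an assumption (point it at wherever your Solr instance and core actually live), and the helper names `build_select_url`, `candidate_matches` and `search` are made up for this example.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def build_select_url(base_url, name, extra_filters=()):
    """Build a select URL that searches name_general, with optional fq filters."""
    params = [("q", f"name_general:{name}"), ("wt", "json"), ("indent", "on")]
    params += [("fq", f"name_general:{term}") for term in extra_filters]
    return f"{base_url}/select?{urlencode(params)}"


def candidate_matches(response):
    """Reduce a Solr JSON response to (collection, collection_id, name) tuples."""
    docs = response["response"]["docs"]
    return [(d["collection"], d["collection_id"], d["name"][0]) for d in docs]


def search(base_url, name, extra_filters=()):
    """Run the query against a live Solr instance (needs a running server)."""
    with urlopen(build_select_url(base_url, name, extra_filters)) as fh:
        return candidate_matches(json.load(fh))


# Assumed base URL; adjust the scheme, host and core path for your install.
print(build_select_url("http://localhost:8983/solr", "moggridge", ["bill"]))
```

Each tuple returned by `candidate_matches` is a candidate for a concordance; deciding whether two candidates really are the same person is still up to you.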

Here’s another example:

$> curl 'https://localhost:8983/solr/whosonfirst/select?q=name_general:dreyfuss&wt=json&indent=on'

    {"response":{"numFound":3,"start":0,"docs":[
      {
        "concordances":["ulan:id=500059346"],
        "name":["Dreyfuss, Henry"],
        "uri":"x-urn:imamuseum:id=656174",
        "collection":"imamuseum",
        "collection_id":"656174",
        "year_death":[1972],
        "year_birth":[1904],
        "_version_":1423872453083398149},
      {
        "concordances":["ulan:id=500059346",
          "wikipedia:id=1697559",
          "freebase:id=/m/05p6rp",
          "viaf:id=8198939",
          "ima:id=656174"],
        "name":["Henry Dreyfuss"],
        "uri":"x-urn:ch:id=18041501",
        "collection":"cooperhewitt",
        "collection_id":"18041501",
        "_version_":1423872563648397315},
      {
        "concordances":["wikipedia:id=1697559",
          "moma:id=1619"],
        "name":["Henry Dreyfuss Associates"],
        "uri":"x-urn:ch:id=18041029",
        "collection":"cooperhewitt",
        "collection_id":"18041029",
        "_version_":1423872563567656970}]
    }
    }

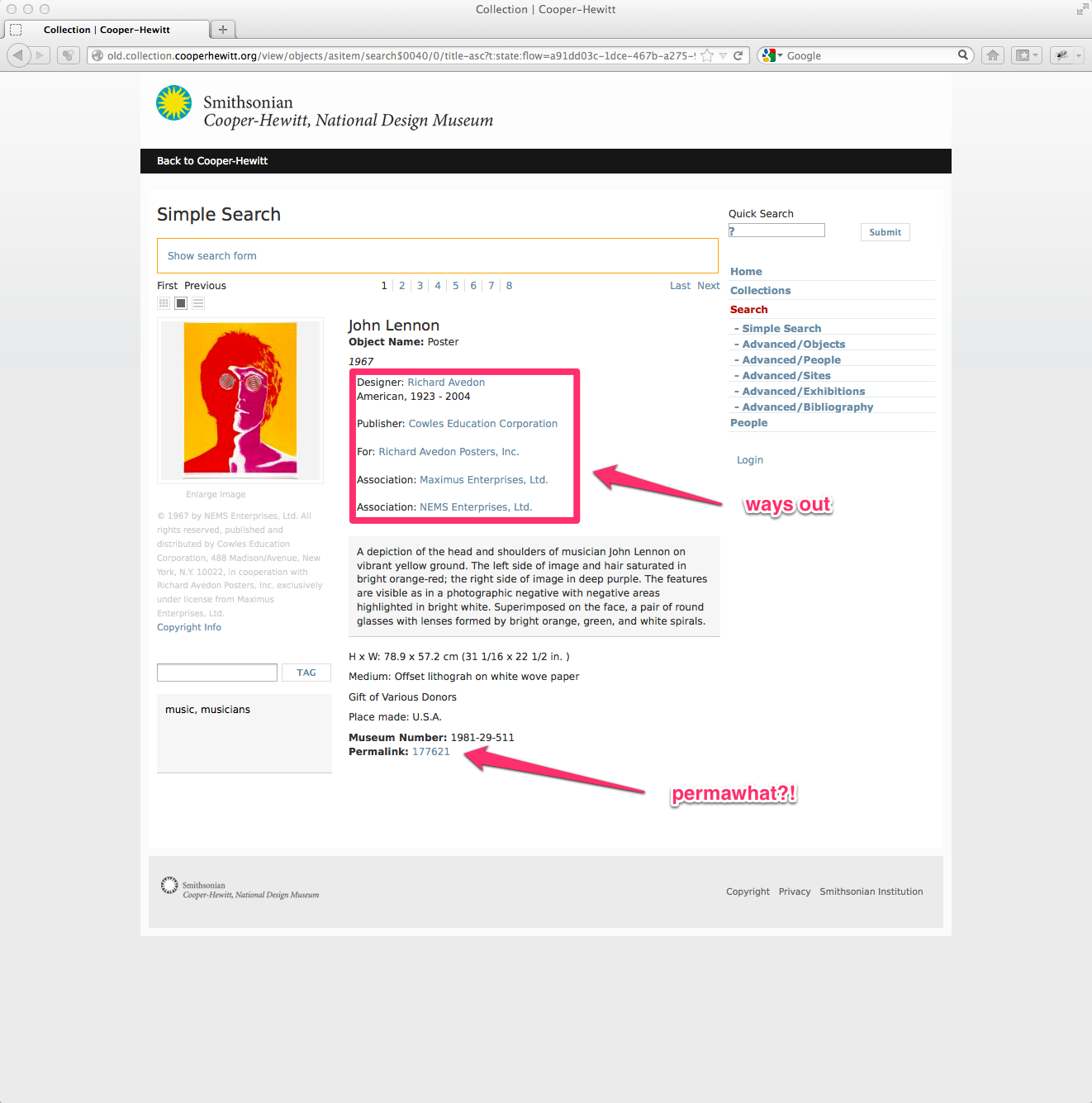

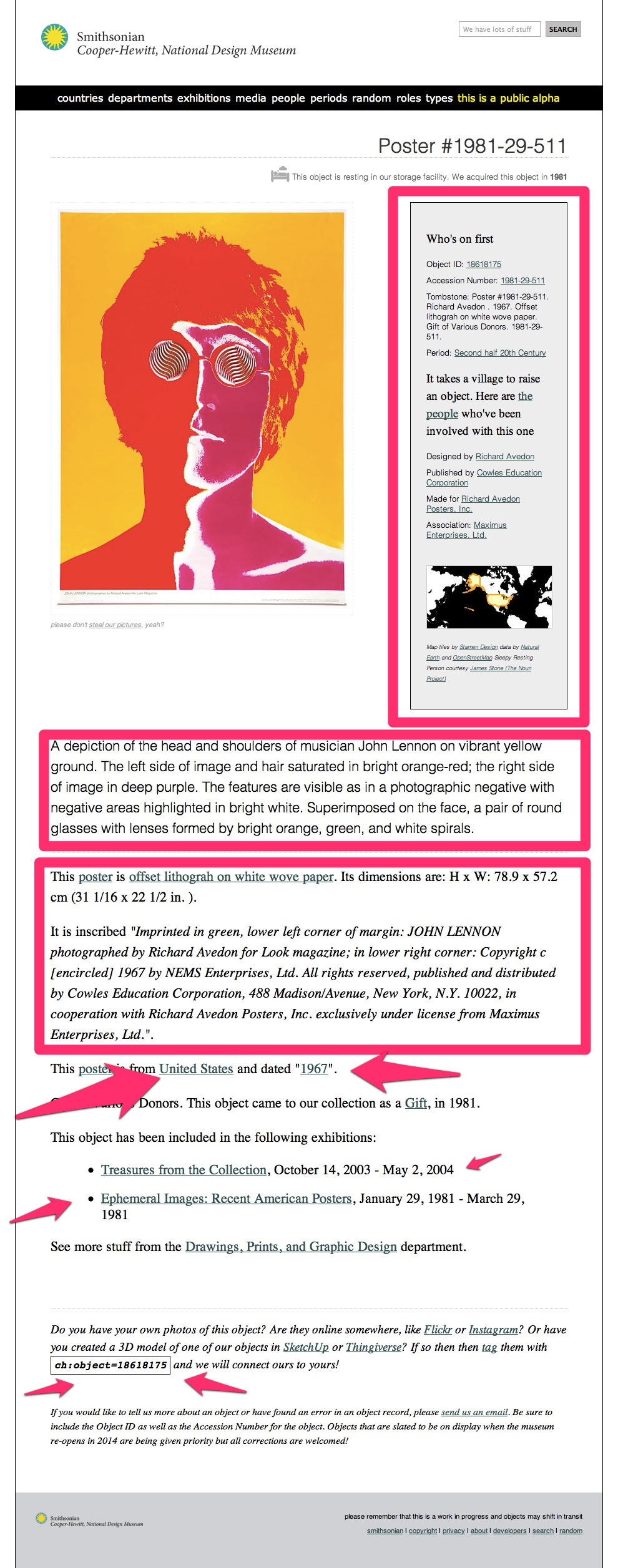

See the way the two Cooper-Hewitt records, one for Henry Dreyfuss and one for Henry Dreyfuss Associates, have the same concordance in Wikipedia? That’s an interesting wrinkle that we should probably take a look at. In the meantime, we’ve managed to glean some new information from the IMA (Henry Dreyfuss’ year of birth and death) and they from us (concordances with Wikipedia and Freebase and VIAF).

The goal is to start building out some of the smarts around entity (that’s fancy-talk for people and things) disambiguation that we tend to gloss over.
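Wrinkles like that shared Wikipedia ID are easy to surface mechanically. Here is a rough sketch in Python that groups the documents from a response by concordance and flags any tag claimed by more than one record in the same collection. The function names are hypothetical and the input is simply the docs list from the Solr JSON shown above.

```python
from collections import defaultdict


def shared_concordances(docs):
    """Map each concordance tag to the records that carry it, keeping shared tags only."""
    by_tag = defaultdict(list)
    for doc in docs:
        for tag in doc.get("concordances", []):
            by_tag[tag].append((doc["collection"], doc["collection_id"]))
    return {tag: recs for tag, recs in by_tag.items() if len(recs) > 1}


def within_collection(shared):
    """Keep only tags claimed by two or more records of the same collection."""
    flagged = {}
    for tag, recs in shared.items():
        collections = [collection for collection, _ in recs]
        if len(set(collections)) < len(collections):
            flagged[tag] = recs
    return flagged


docs = [
    {"collection": "imamuseum", "collection_id": "656174",
     "concordances": ["ulan:id=500059346"]},
    {"collection": "cooperhewitt", "collection_id": "18041501",
     "concordances": ["ulan:id=500059346", "wikipedia:id=1697559"]},
    {"collection": "cooperhewitt", "collection_id": "18041029",
     "concordances": ["wikipedia:id=1697559", "moma:id=1619"]},
]
print(within_collection(shared_concordances(docs)))
```

A tag shared across collections (the ULAN ID here) is the concordance doing its job; a tag shared within one collection is the kind of thing worth a second look.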

None of what’s being proposed here is all that sophisticated or clever. It’s a little clever and my hunch tells me it will be a good general-purpose spelunking tool and something for sanity checking data more than it will be an all-knowing magic pony. The important part, for me, is that it’s an effort to stand something up in public and to share it and to invite comments and suggestions and improvements and gentle cluebats.

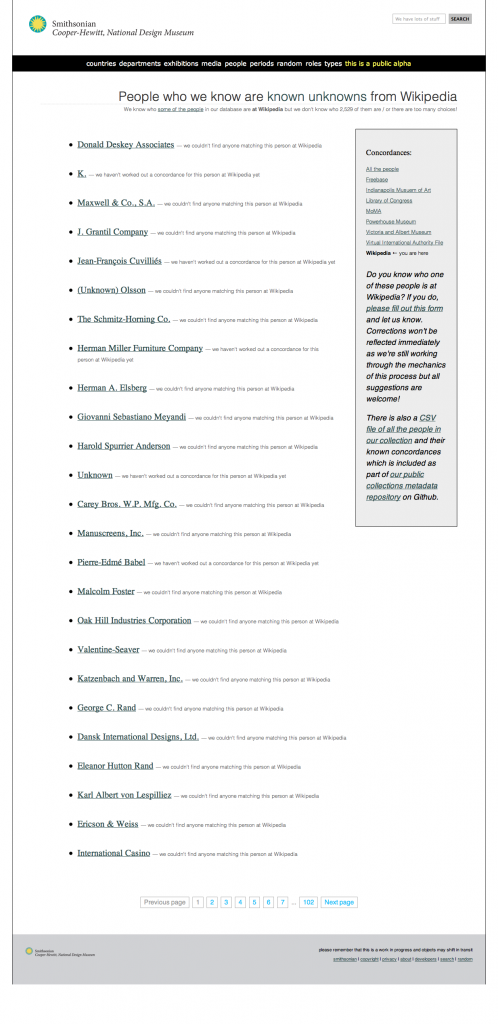

Concordances (and machine tags)

There are also some even more experimental and very much optional hooks for allowing you to store known concordances as machine tags and to query them using the same wildcard syntax that Flickr uses, as well as generating hierarchical facets.

I put the machine tags reading list that I prepared for Museums and the Web in 2010 on GitHub. It’s a good place to start if you’re unfamiliar with the subject.
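If machine tags are new to you, the shape is namespace:predicate=value, as in wikipedia:id=1697559. The Python sketch below shows one way to take a tag apart, match it against a Flickr-style wildcard pattern, and expand it into the path segments a hierarchical facet might be built from. It is an illustration of the idea and not the core’s implementation; the names `parse_machine_tag`, `matches` and `facet_paths` are our own, and the facet layout in particular is an assumption.

```python
from typing import NamedTuple


class MachineTag(NamedTuple):
    namespace: str
    predicate: str
    value: str


def parse_machine_tag(tag: str) -> MachineTag:
    """Split 'namespace:predicate=value' into its three parts."""
    head, equals, value = tag.partition("=")
    namespace, colon, predicate = head.partition(":")
    if not (equals and colon and namespace and predicate):
        raise ValueError(f"not a machine tag: {tag!r}")
    return MachineTag(namespace, predicate, value)


def matches(tag: str, pattern: str) -> bool:
    """Match a tag against a pattern such as 'wikipedia:*=' or '*:id=1697559'.

    A '*' namespace or predicate, or an empty (or '*') value, matches anything.
    """
    t = parse_machine_tag(tag)
    p = parse_machine_tag(pattern)
    return (p.namespace in ("*", t.namespace)
            and p.predicate in ("*", t.predicate)
            and p.value in ("", "*", t.value))


def facet_paths(tag: str) -> list:
    """Expand a tag into progressively narrower facet keys (assumed layout)."""
    t = parse_machine_tag(tag)
    return [t.namespace,
            f"{t.namespace}:{t.predicate}",
            f"{t.namespace}:{t.predicate}={t.value}"]


print(matches("wikipedia:id=1697559", "wikipedia:*="))
print(facet_paths("freebase:id=/m/05p6rp"))
```

Faceting on the first segment would tell you how many records have any Wikipedia concordance at all, while the last segment pins down one specific ID.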

Download

There are two separate repositories that you can download to get started.

The first is the actual Solr core and config files. The second is a set of sample data files and import scripts that you can use to pre-seed your instance of Solr. Sample data files are available from the following sources:

- The Open Library (specifically their list of authors)
- The Walker Art Center

The data in these files is not standardized. There are source-specific tools for importing each dataset in the bin directory. In some cases the data here is a subset of the data that the source itself publishes. For example, the Open Library dataset only contains authors and IDs since there are so many of them (approximately 7M).
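Because every source shapes its data differently, each import tool mostly boils down to mapping one institution’s rows onto the core’s handful of fields. The Python sketch below shows that idea and nothing more: it is not one of the scripts in the bin directory, and the input row (with its id, artist, born and died keys) is a made-up layout for the sake of the example.

```python
def to_solr_doc(collection, collection_id, name, uri,
                year_birth=None, year_death=None, concordances=()):
    """Build a document using the core's fields, omitting optional ones when absent."""
    doc = {
        "collection": collection,
        "collection_id": str(collection_id),
        "name": name,
        "uri": uri,
    }
    if year_birth is not None:
        doc["year_birth"] = int(year_birth)
    if year_death is not None:
        doc["year_death"] = int(year_death)
    if concordances:
        doc["concordances"] = list(concordances)
    return doc


def _year(value):
    """Treat missing or empty year values as unknown."""
    return None if value in (None, "") else int(value)


def from_hypothetical_ima_row(row):
    """Map a hypothetical source row onto the core's fields."""
    return to_solr_doc(
        collection="imamuseum",
        collection_id=row["id"],
        name=row["artist"],
        uri=f"x-urn:imamuseum:id={row['id']}",
        year_birth=_year(row.get("born")),
        year_death=_year(row.get("died")),
    )


row = {"id": 656174, "artist": "Dreyfuss, Henry", "born": "1904", "died": "1972"}
print(from_hypothetical_ima_row(row))
```

Keeping the optional fields out of the document when a source does not supply them mirrors the sample responses above, where only some records carry year_birth and year_death.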

Additional datasets will be added as time and circumstances (and pull requests) permit.

Enjoy!